The $2 Billion Problem and the AI Promise

Welcome to the paradoxical world of modern pharmaceutical research and development. It is an arena of breathtaking scientific achievement, where our understanding of human biology deepens by the day, yet it is simultaneously an industry staring into an economic abyss. For decades, a shadow has been lengthening over the gleaming laboratories and bustling clinical trial centers of the biopharma world. This shadow has a name: “Eroom’s Law.” It is Moore’s Law, the principle of exponential progress in computing, spelled backward, and it describes a brutal, decades-long trend: despite revolutionary advances in technology, the number of new drugs approved per billion dollars of inflation-adjusted R&D spending has roughly halved every nine years since 1950.
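The decline that Eroom’s Law describes can be written as a simple halving curve. The sketch below assumes the commonly cited halving period of about nine years; the starting value of 32 approvals per billion dollars is a made-up number chosen only so the arithmetic is easy to follow, and the function name is illustrative.

```python
def drugs_per_billion(initial_rate: float, years_elapsed: float,
                      halving_years: float = 9.0) -> float:
    """Approvals per billion R&D dollars after a period of steady halving.

    halving_years=9.0 is an assumption (the commonly cited Eroom's Law figure).
    """
    return initial_rate * 0.5 ** (years_elapsed / halving_years)


if __name__ == "__main__":
    # Hypothetical starting productivity of 32 approvals per billion dollars.
    for years in (0, 9, 18, 27, 36, 45):
        print(years, drugs_per_billion(32.0, years))
```

Five halvings over 45 years reduce the hypothetical starting rate by a factor of 32, which is why even small annual declines compound into a structural problem.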

This is not a minor operational inefficiency; it is a systemic crisis. The journey of a single new medicine from a laboratory hypothesis to a patient’s hands is one of the most arduous and expensive undertakings in modern industry. The timeline is punishing, stretching across 10 to 15 years of relentless effort [2]. The financial commitment is staggering, with the average cost to develop a new asset now estimated at roughly $2.23 billion. And the odds of success? They are nothing short of abysmal. For every 20,000 to 30,000 compounds that show a glimmer of promise in the earliest stages of testing, only one will ultimately navigate the gauntlet of preclinical and clinical trials to receive regulatory approval. This colossal attrition rate has pushed the industry’s return on investment (ROI) to the brink, hitting a record low of just 1.2% in 2022 before a recent, tentative recovery began.

This is the $2 billion problem. It is a model of innovation that has become fundamentally unsustainable, a high-stakes gamble where the house almost always wins. The immense cost and risk have created a bottleneck that limits the number of new medicines reaching patients, leaving countless diseases with unmet needs. The pressure for a new paradigm is not merely about gaining a competitive edge; it is about restoring the economic viability of R&D and, by extension, the future of therapeutic innovation itself.

Into this challenging landscape steps a transformative force: artificial intelligence (AI) and its powerful subset, machine learning (ML). This is not just another incremental tool or a piece of faster lab equipment. AI represents a fundamental rewiring of the entire R&D engine. It signals a paradigm shift from a process reliant on serendipity, brute-force screening, and educated guesswork to one that is data-driven, predictive, and intelligent [6]. Machine learning algorithms can sift through biological and chemical data at a scale and complexity that is simply beyond human cognition, identifying patterns, predicting outcomes, and generating novel hypotheses that can slash years and billions of dollars from the development lifecycle.

The potential economic impact is immense. The McKinsey Global Institute estimates that AI could generate up to $110 billion in annual economic value for the pharmaceutical industry. But the true promise of this technological revolution lies not just in improving the bottom line, but in breaking the chains of Eroom’s Law. Can AI turn the tide on R&D productivity? Can it increase the probability of success, reduce the cost of failure, and ultimately deliver more life-saving medicines to more people, more quickly? This report will argue that the answer is a resounding yes. We stand at the dawn of a new pharmaceutical era, one where the algorithmic elixir of machine learning is poised to recode the very future of how we discover and develop medicine.

The Old Guard: Deconstructing the Traditional Drug Development Pipeline

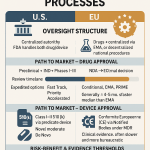

To fully grasp the revolutionary nature of machine learning, we must first appreciate the intricate, time-honored, and profoundly challenging process it seeks to transform. The traditional drug development pipeline is a linear and sequential marathon, a series of rigorously defined stages, each acting as a gatekeeper to the next. This structure, codified by regulatory bodies like the U.S. Food and Drug Administration (FDA) and the European Medicines Agency (EMA), is designed to ensure that any new therapeutic is both safe and effective before it reaches the public. While essential for patient safety, this rigid framework is also the source of the industry’s immense costs and protracted timelines.

The journey begins with a spark of an idea and ends, if successful, in a pharmacy, but the path between is long and fraught with peril. Let’s walk through the key stages of this conventional gauntlet.

Stage 1: Discovery and Development

This is the genesis of a new drug. It begins in the laboratory with basic research to understand a disease at its molecular level. Scientists work to identify a “target”—typically a protein, gene, or biological pathway implicated in the disease process. Once a target is identified, the hunt begins for a compound or “hit” that can interact with it in a therapeutically beneficial way. This often involves high-throughput screening (HTS), where thousands or even millions of chemical compounds are tested against the target. As noted, the odds are daunting; for every 20,000-30,000 compounds screened, only a handful will show enough promise to move forward.

Stage 2: Preclinical Research

Once a promising compound is discovered, it enters preclinical studies. This phase is conducted entirely in non-human systems, using laboratory techniques (in vitro) and animal models (in vivo) to answer fundamental questions about the drug’s safety and biological activity [5]. Researchers gather critical data on pharmacodynamics (how the drug affects the body), pharmacokinetics (how the body affects the drug), and toxicology. These mandatory studies are designed to build a safety profile and provide early evidence of efficacy, helping to determine if the drug is a viable candidate for human testing.

Stage 3: First Regulatory Filing (The IND/CTA)

If the preclinical data is promising, the drug’s sponsor, typically the pharmaceutical company, compiles a comprehensive package of information. This includes all manufacturing details, preclinical safety data, and a detailed plan for human studies. This package is submitted to a regulatory agency as an Investigational New Drug (IND) application in the United States or a Clinical Trial Application (CTA) in Europe. Regulators meticulously review the submission to ensure that the proposed study does not place human subjects at unreasonable risk. In the United States, an IND takes effect 30 days after the FDA receives it unless the agency places the study on clinical hold; a CTA must be authorized by the relevant European authorities. Only then can the drug move into the most critical phase: clinical trials.

Stages 4-6: Clinical Research (Phases I, II, and III)

This is where a potential drug is first tested in humans, a multi-phase process that is the longest, most expensive, and most failure-prone part of the entire journey.

- Phase I: The primary goal of Phase I is to assess safety and determine a safe dosage range. These trials typically involve a small number of participants (20-80), who are often healthy volunteers, although for certain diseases like cancer, patients may be enrolled.

- Phase II: If the drug is deemed safe in Phase I, it moves to a larger group of several hundred patients to evaluate its efficacy and further assess its safety profile. This phase is designed to see if the drug actually works on the intended disease.

- Phase III: This is the final, large-scale confirmation stage. Phase III trials involve several hundred to several thousand patients across multiple locations and are designed to definitively confirm the drug’s effectiveness, monitor side effects, compare it to commonly used treatments, and collect information that will allow it to be used safely. These “pivotal” trials are incredibly complex and costly, and their cycle times have recently been increasing, adding further pressure to R&D budgets.

Stage 7: Regulatory Filing for Approval (The NDA/BLA)

Assuming the drug successfully navigates all three phases of clinical trials and demonstrates a favorable risk-benefit profile, the sponsor submits a New Drug Application (NDA) or Biologics License Application (BLA) to the FDA. This is an exhaustive application containing all the data gathered throughout the entire development process. FDA review teams then conduct a thorough examination to decide whether to approve the drug for marketing.

Stage 8: Post-Market Safety Monitoring

Even after a drug is approved and on the market, the journey is not over. The FDA and other regulatory bodies continue to monitor the drug’s safety through post-market surveillance programs. This allows them to track any unforeseen adverse events that may only become apparent when the drug is used by a much larger and more diverse population.

The fundamental architecture of this pipeline—a rigid, sequential gauntlet where each stage must be largely completed before the next begins—creates a system where the cost of failure is maximized at the latest stages. A drug that fails in a Phase III trial represents a decade or more of work and hundreds of millions, if not billions, of dollars in sunk costs. This linear structure also creates information silos; insights gained in late-stage clinical trials cannot easily feed back to optimize the initial discovery process for the next generation of drugs. It is this very structure—the linear dependency, the late-stage discovery of failure, and the siloed information flow—that makes the traditional model so vulnerable and ripe for the kind of disruption that machine learning promises.

The AI-Powered Paradigm Shift: From In Vitro to In Silico

The limitations of the traditional pipeline are not due to a lack of scientific rigor or human ingenuity. They are inherent to a process that relies on physical experimentation to navigate an almost unimaginably vast biological and chemical space. Machine learning offers a fundamentally different approach. At its core, ML is the practice of using algorithms to parse data, learn from it, and then make a determination or prediction about new data without being explicitly programmed for that specific task. It is this ability to learn complex, non-obvious patterns from data that allows AI to transform drug discovery, shifting the center of gravity from the wet lab to the computer—from in vitro to in silico.

This transition is not just about doing the same things faster; it is about doing things that were previously impossible. It represents a fundamental inversion of the traditional workflow. Instead of a “make-then-test” approach, where physical compounds are synthesized and then screened, AI enables a “predict-then-make” paradigm. Hypotheses are generated, molecules are designed, and properties are validated at a massive scale in silico, with precious laboratory resources reserved only for confirming the most promising, AI-vetted candidates. To understand how this is possible, it is essential to grasp the key types of machine learning being deployed in the pharmaceutical arsenal.

The AI Arsenal: A Primer on Core Machine Learning Techniques

While the field of AI is vast and complex, its application in drug discovery can be broadly understood through a few key categories of techniques, each suited to different tasks along the R&D value chain [6].

- Supervised Learning: This is the workhorse of predictive modeling in pharma. In supervised learning, an algorithm is trained on a “labeled” dataset, where both the input data (e.g., the chemical structure of a molecule) and the desired output (e.g., whether it is toxic or not) are known. The model learns to map the inputs to the correct outputs by identifying the underlying patterns and relationships. Once trained, it can predict the output for new, unseen inputs. This makes it ideal for classification tasks (e.g., active vs. inactive compound) and regression tasks (e.g., predicting a specific binding affinity value).

- Unsupervised Learning: Where supervised learning relies on labeled data, unsupervised learning is used to find hidden structures and patterns within unlabeled data. There is no predefined “correct” answer for the algorithm to learn from. Instead, techniques like clustering are used for exploratory purposes. For instance, an unsupervised algorithm could group thousands of compounds based on their physicochemical properties, revealing novel classes of molecules, or it could analyze patient data to identify previously unknown disease subtypes.

- Deep Learning (DL): A specialized and highly powerful subfield of machine learning, deep learning utilizes multi-layered artificial neural networks, architectures inspired by the interconnected neurons of the human brain. These deep networks can learn significantly more complex and abstract patterns from data than traditional ML models. This capability is essential for making sense of the high-dimensional, intricate data common in biology, such as genomic sequences, protein structures, and high-resolution medical images [10].

- Reinforcement Learning (RL): This paradigm involves algorithms that learn through interaction with an environment, much like a person learning a new skill through trial and error. The system, or “agent,” takes actions, observes the outcomes, and receives “rewards” or “penalties” based on those outcomes. Over time, it learns a strategy to maximize its cumulative reward. In drug discovery, RL is particularly well-suited for complex, sequential decision-making problems, such as optimizing a multi-step chemical synthesis pathway or designing an adaptive clinical trial protocol that can be modified in real-time based on patient responses [6].

- Generative AI: This is perhaps the most revolutionary application of AI in the field. While other methods predict or classify existing data, generative AI creates new data. Models like Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) are trained on vast datasets of known molecules and learn the fundamental rules of chemistry. They can then be used to generate entirely novel molecular structures from scratch, designed to have specific, desirable properties. This moves beyond simply screening existing libraries to actively inventing the next generation of medicines.

These techniques are not mutually exclusive; they are often combined into powerful hybrid models. A deep learning model might be used to extract features from a molecule’s structure, which are then fed into a supervised learning model to predict its toxicity. The table below provides a quick-reference guide, translating these abstract computational methods into their tangible functions within the R&D process.

The AI Arsenal: Key Machine Learning Techniques in Drug Discovery

| Category | Specific Models/Techniques | Primary Application in Drug Discovery |
| --- | --- | --- |
| Supervised Learning | Random Forest, Support Vector Machines (SVMs) | Predicting ADMET properties, classifying compound activity, predicting drug-target interactions. |
| Unsupervised Learning | K-Means Clustering, Principal Component Analysis (PCA) | Identifying patient subgroups from clinical data, clustering molecules with similar properties, dimensionality reduction of omics data. |
| Deep Learning | Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), Transformers | Analyzing medical images (pathology, radiology), predicting 3D protein structures (e.g., AlphaFold), processing sequential data like DNA or SMILES strings. |
| Reinforcement Learning | Q-Learning, Policy Gradients | Optimizing chemical synthesis pathways, designing adaptive clinical trial protocols, guiding de novo molecule generation toward desired properties. |
| Generative AI | Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), Diffusion Models | De novo design of novel small molecules and proteins, generating synthetic data to augment sparse datasets. |

This powerful toolkit is what enables the shift from the physical to the digital realm. It allows researchers to ask—and answer—questions at a speed and scale that were unimaginable just a decade ago. It is this computational horsepower, applied across the entire development pipeline, that is fueling the ongoing revolution in pharmaceutical research.
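To make the supervised-learning idea concrete, here is a minimal sketch of a classifier that labels a new molecule by comparing its structural fingerprint with labeled examples. It is not any particular platform’s method: the fingerprints are made-up sets of substructure bit indices, the labels are invented, and a simple k-nearest-neighbour vote over Tanimoto similarity stands in for the Random Forest or neural models named in the table.

```python
from collections import Counter


def tanimoto(a: frozenset, b: frozenset) -> float:
    """Tanimoto (Jaccard) similarity between two sets of 'on' fingerprint bits."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def knn_predict(query: frozenset, training_set, k: int = 3) -> str:
    """Label a query fingerprint by majority vote of its k most similar neighbours."""
    ranked = sorted(training_set,
                    key=lambda item: tanimoto(query, item[0]),
                    reverse=True)
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Toy labeled data (hypothetical): each set lists the substructure bits that are on.
TRAINING_SET = [
    (frozenset({1, 2, 3, 4}), "toxic"),
    (frozenset({1, 2, 3, 9}), "toxic"),
    (frozenset({2, 3, 4, 5}), "toxic"),
    (frozenset({10, 11, 12}), "non-toxic"),
    (frozenset({10, 12, 13, 14}), "non-toxic"),
    (frozenset({11, 12, 15}), "non-toxic"),
]

if __name__ == "__main__":
    print(knn_predict(frozenset({1, 2, 4, 5}), TRAINING_SET))
```

The same structure carries over to real workflows: a featurization step turns a molecule into numbers, and a model trained on labeled examples maps those numbers to a prediction.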

Dissecting the Revolution: ML’s Impact Across the R&D Value Chain

The true power of machine learning is not in a single application but in its ability to optimize and interconnect every link in the long chain of drug development. From the initial search for a biological target to the design of final-phase human trials, AI is being deployed to make the process faster, cheaper, and more likely to succeed. This section will take a deep dive into the specific ways ML is revolutionizing each critical stage of the R&D value chain.

Target Identification and Validation: Finding the “Why” of Disease

The journey of every drug begins with a single, crucial decision: which biological target to pursue. Choosing the right target—the specific gene or protein that drives a disease—is paramount. An incorrect target choice guarantees failure, regardless of how well-designed the subsequent drug may be. Traditionally, this process has been slow and laborious, relying on years of academic research to elucidate disease pathways. AI is changing this by transforming target identification from a process of slow deduction to one of rapid, data-driven inference.

The core advantage of AI here is its ability to integrate and synthesize vast, heterogeneous datasets that no human researcher could ever hope to process. Machine learning algorithms can analyze multi-omics data, including genomics (genetic variants associated with disease), proteomics (protein abundance and interactions), and transcriptomics (gene expression patterns), to uncover subtle correlations between specific biological entities and disease states [11]. This allows them to systematically prioritize potential targets based on the weight of the biological evidence.

This capability extends beyond numerical data. Natural Language Processing (NLP) models are now being deployed to mine the world’s repository of biomedical knowledge: millions of scientific publications, patent filings, and clinical trial reports. These models can read, understand, and connect information at a massive scale, identifying novel relationships between genes, diseases, and pathways that may have been overlooked by human researchers [11]. This is augmented by the use of knowledge graphs, which create complex network maps of biological interactions, allowing AI to identify critical nodes that represent the most promising points for therapeutic intervention.
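A knowledge graph of this kind can be sketched in a few lines. The example below is illustrative only: the triples are invented stand-ins for relationships an NLP pipeline might extract, the gene names are placeholders, and degree centrality (counting distinct neighbours) is used as the simplest possible proxy for a “critical node.” Production systems typically use richer graph algorithms or learned embeddings.

```python
from collections import defaultdict

# Hypothetical (subject, relation, object) triples extracted from literature.
TRIPLES = [
    ("GENE_A", "associated_with", "fibrosis"),
    ("GENE_A", "participates_in", "pathway_X"),
    ("GENE_A", "interacts_with", "GENE_B"),
    ("GENE_B", "participates_in", "pathway_X"),
    ("GENE_C", "associated_with", "fibrosis"),
    ("GENE_A", "interacts_with", "GENE_C"),
]


def build_graph(triples):
    """Build an undirected adjacency map, ignoring the relation type."""
    graph = defaultdict(set)
    for subject, _relation, obj in triples:
        graph[subject].add(obj)
        graph[obj].add(subject)
    return graph


def rank_by_degree(graph, prefix="GENE_"):
    """Rank gene nodes by number of distinct neighbours (ties broken by name)."""
    genes = [node for node in graph if node.startswith(prefix)]
    return sorted(genes, key=lambda node: (-len(graph[node]), node))


if __name__ == "__main__":
    graph = build_graph(TRIPLES)
    for gene in rank_by_degree(graph):
        print(gene, len(graph[gene]))
```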

Perhaps the most celebrated breakthrough in this area is DeepMind’s AlphaFold, whose developers Demis Hassabis and John Jumper shared the 2024 Nobel Prize in Chemistry for their work on protein structure prediction. AlphaFold uses deep learning to predict the three-dimensional structure of proteins from their amino acid sequence with astonishing accuracy [14]. Since a protein’s function is largely dictated by its shape, knowing the 3D structure of a disease-related target is a monumental leap forward. It allows computational chemists to begin structure-based design against the target right away, rather than waiting for an experimentally determined structure, which previously could take years of painstaking laboratory work.

Finally, AI also plays a crucial role in validating these identified targets. Machine learning models can correlate a target’s expression levels with clinical data, such as medical imaging biomarkers or patient outcomes, to confirm its relevance to the disease phenotype. By providing a more robust, data-backed foundation for the entire R&D program, AI-driven target identification and validation significantly de-risks the most critical decision in drug discovery.

Hit Discovery and Lead Optimization: From a Haystack of Compounds to a Handful of Candidates

Once a target is validated, the next challenge is to find a molecule that can interact with it. This begins with “hit discovery,” the process of screening enormous libraries of chemical compounds to find one that shows the desired biological activity. This is followed by “hit-to-lead” (H2L) and “lead optimization,” where medicinal chemists painstakingly refine that initial hit, tweaking its structure to improve its properties and turn it into a viable drug candidate, or “lead”. This is a classic multi-parameter optimization problem: how do you increase a molecule’s potency without making it toxic? How do you improve its solubility without harming its ability to bind to the target?

This is where AI excels. AI-enhanced virtual screening can computationally evaluate libraries containing billions of virtual compounds in a fraction of the time and cost of physical high-throughput screening [11]. Instead of physically testing every compound in the library, AI models predict which ones are most likely to be active, allowing researchers to focus their experimental efforts on a much smaller, more promising set of molecules.
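The triage step at the heart of virtual screening can be sketched as a streaming top-k selection. In the example below, `predicted_activity` is a purely illustrative placeholder (an arithmetic hash, not a trained model) and compounds are represented by integer IDs; the point is only that a library can be scored lazily and reduced to a shortlist without holding every score in memory.

```python
import heapq


def predicted_activity(compound_id: int) -> float:
    """Placeholder scorer standing in for a trained model (illustrative only)."""
    return ((compound_id * 2654435761) % 1000) / 1000.0


def virtual_screen(library, scorer, top_k):
    """Return the top_k (score, compound) pairs, best first, from an iterable library."""
    return heapq.nlargest(top_k, ((scorer(c), c) for c in library))


if __name__ == "__main__":
    # A 100,000-compound toy "library"; only the five best are sent to the lab.
    shortlist = virtual_screen(range(100_000), predicted_activity, top_k=5)
    for score, compound in shortlist:
        print(compound, score)
```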

In the crucial H2L phase, ML models are used to build sophisticated Structure-Activity Relationship (SAR) models. These models learn the complex, often non-intuitive rules that connect a molecule’s chemical structure to its biological effects. This allows them to predict multiple properties simultaneously—potency, selectivity, solubility, permeability, metabolic stability, and more. This is a game-changer for medicinal chemists, who traditionally have to optimize these properties through a slow, iterative process of trial and error. With AI, they can explore a vast chemical space in silico, evaluating thousands of potential modifications to a hit molecule and seeing the predicted impact on all key properties at once.
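One common way to reason about this kind of multi-parameter trade-off is to keep only the candidates that are not beaten on every property by some other candidate, the so-called Pareto front. The sketch below assumes three predicted properties with invented values and hypothetical analog names; it is a generic illustration of the technique, not the workflow of any specific platform.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    potency: float      # predicted, higher is better
    solubility: float   # predicted, higher is better
    toxicity: float     # predicted, lower is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is at least as good as b on every property and better on one."""
    no_worse = (a.potency >= b.potency and a.solubility >= b.solubility
                and a.toxicity <= b.toxicity)
    strictly_better = (a.potency > b.potency or a.solubility > b.solubility
                       or a.toxicity < b.toxicity)
    return no_worse and strictly_better


def pareto_front(candidates):
    """Keep candidates that no other candidate dominates."""
    return [c for c in candidates
            if not any(dominates(other, c) for other in candidates)]


# Hypothetical predicted profiles for four modifications of a hit molecule.
CANDIDATES = [
    Candidate("analog-1", 7.2, 0.30, 0.40),
    Candidate("analog-2", 8.1, 0.20, 0.55),
    Candidate("analog-3", 6.9, 0.25, 0.45),  # worse than analog-1 on all three
    Candidate("analog-4", 7.0, 0.60, 0.10),
]

if __name__ == "__main__":
    print([c.name for c in pareto_front(CANDIDATES)])
```

The chemist still has to choose among the survivors, but clearly inferior modifications are removed before anyone spends time synthesizing them.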

Leading platforms, such as Schrödinger’s molecular design suite, have embraced a “predict-first” approach. They combine highly accurate, physics-based simulations of molecular interactions with the speed and pattern-recognition power of machine learning. This allows R&D teams to confidently explore novel and complex molecular designs entirely on the computer, sending only the top-performing, AI-vetted candidates for physical synthesis. This inversion of the traditional workflow dramatically expands the pool of molecules that can be explored while simultaneously reducing the time and resources spent in the lab.

De Novo Drug Design: Inventing Molecules with Generative AI

Virtual screening and lead optimization are powerful tools for exploring known chemical space. But what if the ideal drug for a particular target has a structure that no one has ever synthesized or even imagined? This is the limitation that de novo drug design, powered by generative AI, aims to overcome. This approach represents a true paradigm shift, moving from simply searching for promising molecules to actively inventing them.

Generative AI models, such as Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and more recently, Diffusion Models, are the engines of this revolution [19]. These deep learning architectures are trained on large datasets of existing molecules, such as the QM9 and GEOM-Drugs datasets. Through this training, they learn the fundamental grammar of chemistry: the rules of atomic bonding, valency, and three-dimensional structure.

Once trained, these models can be prompted to generate entirely new molecular structures that are chemically valid and possess specific, desirable properties. Researchers can essentially provide the model with a target product profile (for example, “generate a molecule that strongly binds to protein X, has low predicted toxicity, is orally bioavailable, and is easy to synthesize”) and the AI will generate novel candidates that fit the criteria [20]. This allows researchers to explore vast, uncharted regions of chemical space, potentially discovering new classes of drugs with novel mechanisms of action. This is not science fiction; as we will see in a later section, multiple drug candidates designed by generative AI platforms have already advanced into human clinical trials, validating the power of this groundbreaking approach [14].

ADMET Prediction: Foreseeing Failure to Ensure Success

One of the most heartbreaking and costly realities of traditional drug development is late-stage failure. A drug can show fantastic efficacy in early trials, only to be terminated in late-stage development because it turns out to have an unacceptable toxicity profile or poor pharmacokinetic properties. These properties are collectively known as ADMET: Absorption, Distribution, Metabolism, Excretion, and Toxicity. A failure due to poor ADMET represents a massive waste of time and resources.

Machine learning provides a powerful antidote to this problem by enabling a “fail fast, fail cheap” strategy. AI models can now predict a wide range of ADMET properties with a high degree of accuracy in silico, long before a molecule is ever synthesized or tested in an animal. This allows researchers to filter out compounds that are likely to fail due to safety or pharmacokinetic issues at the earliest possible stage of the discovery process.
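The “fail fast, fail cheap” filter can be illustrated with a small sketch. Note what is swapped in here: instead of a trained ADMET model, the example uses Lipinski’s rule of five, a classical heuristic for oral drug-likeness, plus a hypothetical predicted hERG risk score with an arbitrary 0.5 cutoff. All compound names and property values are invented.

```python
def lipinski_violations(props: dict) -> int:
    """Count violations of Lipinski's rule of five."""
    return sum([
        props["mol_weight"] > 500,   # molecular weight in daltons
        props["logp"] > 5,           # octanol-water partition coefficient
        props["h_donors"] > 5,       # hydrogen-bond donors
        props["h_acceptors"] > 10,   # hydrogen-bond acceptors
    ])


def passes_early_filter(props: dict, herg_cutoff: float = 0.5) -> bool:
    """Keep compounds with at most one Lipinski violation and low predicted hERG risk.

    herg_cutoff is an arbitrary illustrative threshold, not a validated value.
    """
    return lipinski_violations(props) <= 1 and props["herg_risk"] < herg_cutoff


# Hypothetical predicted properties for four virtual compounds.
COMPOUNDS = {
    "cmpd-1": {"mol_weight": 350, "logp": 2.1, "h_donors": 2, "h_acceptors": 5, "herg_risk": 0.1},
    "cmpd-2": {"mol_weight": 620, "logp": 6.3, "h_donors": 3, "h_acceptors": 8, "herg_risk": 0.2},
    "cmpd-3": {"mol_weight": 410, "logp": 3.0, "h_donors": 1, "h_acceptors": 6, "herg_risk": 0.8},
    "cmpd-4": {"mol_weight": 520, "logp": 4.0, "h_donors": 2, "h_acceptors": 9, "herg_risk": 0.3},
}

if __name__ == "__main__":
    survivors = [name for name, p in COMPOUNDS.items() if passes_early_filter(p)]
    print(survivors)
```

In an ML-driven workflow the hand-written rules would be replaced by model predictions, but the gating logic, discarding likely failures before synthesis, is the same.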

Specialized platforms like ADMET-AI and ADMET Predictor® have been developed specifically for this purpose. These tools use advanced machine learning models, such as graph neural networks, which are particularly adept at learning from molecular structures [22]. Trained on large, curated datasets of experimental ADMET data from public and private sources, the most comprehensive of these platforms can predict over 175 different properties. Key predictions include critical safety liabilities like hERG channel blockage (a predictor of cardiotoxicity), blood-brain barrier penetration (important for CNS drugs but undesirable for others), oral bioavailability, and general clinical toxicity.

The ability to get an early, computational read on a molecule’s likely ADMET profile is a cornerstone of the modern, AI-driven discovery workflow. It allows teams to de-risk their portfolios by prioritizing compounds with the highest probability of downstream success, dramatically improving the overall efficiency and productivity of the R&D engine. Of course, the reliability of these predictions is paramount, which has spurred a sub-field focused on rigorously benchmarking ADMET models using clean data and robust statistical hypothesis testing to ensure their predictions can be trusted [24].

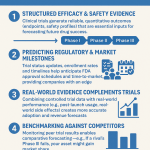

Clinical Trial Optimization: Redesigning Human Studies for the Digital Age

If a drug candidate successfully navigates the preclinical gauntlet, it faces its greatest challenge: clinical trials. This phase is the single largest driver of cost and failure in the entire development process. Traditional trials are often hampered by slow and inefficient patient recruitment, rigid and inflexible protocols, and a reactive approach to safety monitoring. Here too, AI is injecting a new level of data-driven precision, efficiency, and adaptability.

The revolution begins with patient recruitment. Finding the right patients for a trial is a major bottleneck. AI and ML algorithms can accelerate this process dramatically by analyzing massive datasets, including electronic health records (EHRs), genomic data, and even real-world data from social media, to identify eligible candidates with far greater speed and accuracy than manual methods [26]. Studies have shown that AI tools like TrialGPT can reduce patient screening time by over 40% while matching the accuracy of human experts.

AI is also transforming trial design. Instead of relying on static, one-size-fits-all protocols, AI can simulate thousands of potential trial scenarios to optimize key parameters like sample size, dosage regimens, and study duration. More advanced techniques, such as reinforcement learning, are enabling the creation of “adaptive trials.” These are dynamic studies where the protocol can be adjusted in real-time based on incoming data, allowing researchers to more quickly identify effective doses, drop ineffective treatment arms, or focus on patient subgroups who are responding best.
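The idea of shifting patients toward better-performing arms as data arrive can be sketched with Thompson sampling, a simple bandit method that is a close relative of reinforcement learning. Everything here is a simulation under stated assumptions: the three response rates are invented, outcomes are binary and observed immediately, and the function name is hypothetical. Real adaptive designs use pre-specified statistical rules rather than this bare loop.

```python
import random


def thompson_trial(true_response_rates, n_patients, seed=0):
    """Simulate response-adaptive allocation with Thompson sampling.

    Returns the number of simulated patients assigned to each arm.
    """
    rng = random.Random(seed)
    n_arms = len(true_response_rates)
    successes = [0] * n_arms
    failures = [0] * n_arms
    for _ in range(n_patients):
        # Draw a plausible response rate for each arm from its Beta posterior
        # (uniform Beta(1, 1) prior), then assign the patient to the best draw.
        draws = [rng.betavariate(successes[a] + 1, failures[a] + 1)
                 for a in range(n_arms)]
        arm = draws.index(max(draws))
        # Simulate the patient's outcome using the (normally unknown) true rate.
        if rng.random() < true_response_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return [s + f for s, f in zip(successes, failures)]


if __name__ == "__main__":
    # Hypothetical dose arms with 20%, 50%, and 80% true response rates.
    print(thompson_trial([0.2, 0.5, 0.8], n_patients=600))
```

As evidence accumulates, weaker arms receive fewer and fewer patients, which is the behavior the text describes as dropping ineffective treatment arms.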

Furthermore, AI models can enhance patient stratification and safety. By analyzing a patient’s baseline data, models can predict their likely response to a treatment or their risk of experiencing a particular adverse event [26]. This allows for the selection of patients most likely to benefit and for enhanced safety monitoring for those at higher risk.

The potential impact of these interventions is staggering. Industry analyses suggest that the application of AI in clinical trials could lead to cost savings of up to 70% and reduce timelines by as much as 80% [28]. While challenges remain, particularly around the need for more comprehensive data and better model interpretability, the trajectory is clear. AI is poised to redesign the clinical trial process from the ground up, making it more efficient, more patient-centric, and more likely to deliver clear, actionable results.

The most profound impact of AI is not just the acceleration of these individual stages, but their integration into a cohesive, intelligent system. A generative model can design a novel molecule, which is immediately screened by an ADMET prediction model. The predicted properties of that molecule can then inform the design of an optimized clinical trial, and the data from that trial can, in turn, be fed back to train and improve the initial generative and predictive models. This creates a powerful, virtuous cycle—a learning R&D ecosystem where each project makes the entire platform smarter. It is this interconnected feedback loop that is breaking down the traditional silos and truly heralding a new era of pharmaceutical innovation.

The Bottom Line: Quantifying the ROI of AI in Pharma

For business leaders and investors, the scientific elegance of machine learning is compelling, but the ultimate question is one of value. What is the tangible return on investment (ROI) for embracing this technological shift? The data emerging from the industry is painting a clear and powerful picture: AI is not just an efficiency tool; it is a formidable engine for value creation, poised to fundamentally reshape the financial landscape of pharmaceutical R&D.

The macro-economic projections are breathtaking. Some analyses suggest AI could generate between $350 billion and $410 billion in annual value for the pharmaceutical industry by 2025. Another report estimates that AI adoption could add a staggering $254 billion to the annual operating profits of pharmaceutical companies by 2030. These are not marginal gains; they represent a seismic shift in the industry’s economic potential.

This value is being driven by measurable improvements across three key dimensions: speed, cost, and, most importantly, the probability of success.

Radical Timeline Compression

Perhaps the most immediate and visible impact of AI is its ability to dramatically shorten development timelines. The traditional 5-6 year slog from target identification to a preclinical candidate can be compressed to just one year with AI-powered platforms. The real-world success of Insilico Medicine with its IPF drug candidate, which went from a novel, AI-identified target to the start of a Phase I clinical trial in under 30 months, serves as a powerful proof point for this acceleration. Across the board, studies suggest AI has the potential to shave up to four years off the total drug development timeline. In an industry where every day of patent life is worth millions, this speed is a profound competitive advantage.

Substantial Cost Reduction

Shortening timelines naturally leads to lower costs, but AI’s financial benefits go much further. By enabling the “fail fast, fail cheap” strategy through predictive modeling, AI helps companies avoid pouring hundreds of millions of dollars into candidates that are destined to fail. Estimates suggest that AI can reduce preclinical development costs by 25-50%. The impact on the clinical phase, the most expensive part of the journey, is even more significant. By optimizing trial design and accelerating patient recruitment, AI could lead to cost savings of up to 70% per trial. One study put the total potential savings at $26 billion, a figure that exceeds the roughly $2.23 billion average cost of developing a single asset and is therefore best read as an aggregate across many programs rather than a saving on any one drug.

The Ultimate Metric: Increased Probability of Success

While speed and cost savings are critical, the most transformative impact of AI lies in its potential to improve the dismal success rates that have plagued the industry. By enabling better target selection, designing more drug-like molecules with superior safety profiles, and recruiting more appropriate patient populations for trials, AI is fundamentally de-risking the R&D process.

The success rate of the 21 AI‐developed drugs that have completed Phase I trials as of December 2023 is 80%–90%, significantly higher than ~40% for traditional methods.

This statistic is perhaps the single most important piece of evidence for the power of AI in drug discovery. A doubling of the success rate in early-stage clinical trials represents a monumental shift in the risk profile of pharmaceutical investment. It means that capital is being deployed more efficiently, more promising candidates are advancing, and the overall productivity of the R&D pipeline is being fundamentally enhanced. This is a leading indicator of the recovery in the industry’s overall R&D ROI, which has climbed from its 2022 low of 1.2% to a more sustainable 5.9% in 2024.
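To make the comparison concrete, the sketch below converts a Phase I success rate into the expected number of candidates that must enter Phase I for one to pass. It is illustrative arithmetic only: it assumes each candidate succeeds independently with the same probability, and the function name `entrants_per_success` is hypothetical. The rates are the ones quoted above.

```python
def entrants_per_success(success_rate: float) -> float:
    """Expected Phase I entrants needed per Phase I success.

    Assumes independent candidates with an identical success probability,
    so the expected count is simply 1 / p.
    """
    if not 0.0 < success_rate <= 1.0:
        raise ValueError("success_rate must be in (0, 1]")
    return 1.0 / success_rate


# Rates quoted in the text: ~40% traditional, 80%-90% AI-developed.
for label, rate in [("traditional", 0.40), ("AI, low end", 0.80), ("AI, high end", 0.90)]:
    print(f"{label}: {entrants_per_success(rate):.2f} entrants per Phase I success")
```

Under these simplifying assumptions, moving from roughly 40% to 80% halves the number of Phase I entrants needed per success. It says nothing about later phases, where the evidence for AI-developed candidates is still thin.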

The industry has taken notice. Investment in AI is exploding, with projections showing a leap from $4 billion to $25 billion between 2025 and 2030. An overwhelming 95% of pharmaceutical companies report that they are now actively investing in AI capabilities. The table below provides a stark, at-a-glance comparison of the traditional R&D gauntlet versus the new, AI-accelerated paradigm.

The Drug Discovery Gauntlet: Traditional vs. AI-Accelerated Timelines & Costs

| R&D Stage | Traditional Benchmark | AI-Accelerated Benchmark | Cost Impact |
| --- | --- | --- | --- |
| Target ID to Preclinical Candidate | 5–6 years 28 | ~18–30 months | Preclinical cost reduction of 25-50% |
| Preclinical Phase | ~3–6 years | Significant compression within the accelerated timeline | One estimate suggests a total savings of $26 billion per drug |
| Clinical Trials (Overall) | ~6–7 years | Potential timeline reduction of up to 80% | Clinical trial cost savings of up to 70% |
| Total Development Time | 10–15 years | Potential reduction of 4 years or more | Average traditional cost >$2.2B per drug |

The financial case for AI is compelling, but the true ROI extends beyond a single project’s P&L. The greatest value comes from a “network effect” where initial, often costly, investments in data infrastructure and integrated platforms unlock compounding returns over time. Building a massive, high-quality, proprietary dataset, like Recursion’s 60+ petabyte “Map of Biology”, is a formidable upfront investment. However, this data asset becomes a powerful competitive moat. It enables the creation of superior predictive models that competitors without the data simply cannot replicate. As the drug candidates generated by these models advance through clinical trials, they produce more high-quality, structured clinical data, which is then fed back into the platform. This makes the initial data asset even more valuable and the predictive models even more accurate for the next discovery program. The ROI is not just about saving money on one project; it is about building an R&D engine that gets exponentially smarter, more predictive, and more efficient over time. This is the true economic power of the AI revolution.

From Theory to Therapy: AI-Driven Success Stories

The promise of AI in drug discovery is no longer a theoretical projection; it is a clinical reality. A growing cohort of pioneering “AI-native” biotechnology companies are successfully translating computational predictions into tangible therapeutic candidates that are advancing through human trials. These success stories provide the crucial proof that AI-driven approaches can deliver on their promise of speed, efficiency, and novelty. By examining the strategies and achievements of these front-runners, we can see the revolution in action.

Insilico Medicine: The End-to-End Generative AI Pioneer

Hong Kong-based Insilico Medicine has become a flagship example of the power of an end-to-end generative AI platform. Their system, Pharma.AI, comprises three integrated components: PandaOmics for novel target discovery, Chemistry42 for de novo small molecule design, and InClinico for predicting clinical trial outcomes.34 Their strategy is to solve the entire early discovery problem in silico, from identifying a new biological target to designing a novel drug to hit it.

Their most prominent success story is ISM001-055 (Rentosertib), a first-in-class drug candidate for Idiopathic Pulmonary Fibrosis (IPF), a devastating and progressive lung disease.15 The story of its development is a case study in AI-driven acceleration:

- Novel Target Discovery: Using PandaOmics to analyze a wealth of biological data, Insilico identified a completely new target for fibrosis that had not been previously pursued.15

- Generative Design: Their Chemistry42 platform then generated novel small molecules specifically designed to inhibit this new target.

- Record-Breaking Timeline: The entire process, from initiating the target discovery program to nominating a preclinical candidate, took just 18 months at a preclinical cost of around $2.6 million.

- Clinical Validation: The drug candidate successfully completed a Phase 0 microdosing study and entered a Phase I clinical trial in under 30 months from the project’s inception. This timeline is a fraction of the 5-6 year industry average.

- Current Status: As of 2024, Rentosertib has reported positive topline results from its Phase 2a clinical trial, showing a favorable safety profile and encouraging signs of efficacy in IPF patients.36

Insilico’s success demonstrates the viability of a fully integrated, generative AI approach to not only accelerate the development process but also to tackle novel biology, a key driver of true innovation.

Exscientia: The Architect of AI-Powered Precision Medicine

U.K.-based Exscientia has pioneered a different, though equally powerful, AI strategy centered on precision medicine. Their philosophy is to design the right drug for the right patient from the very beginning. Their Centaur AI™ platform is designed to rapidly generate highly optimized molecules that meet the complex profile needed for a successful clinical candidate, cutting the typical 4.5-year discovery timeline down to just 12-15 months.

A key example from their pipeline is EXS-21546, a highly selective A2A receptor antagonist co-developed with Evotec for applications in oncology.39

- Efficient Design: The molecule was identified in just 9 months after the synthesis and testing of only 163 compounds—a remarkably small number compared to the thousands or millions screened in traditional campaigns.

- Patient-Centric Approach: A core part of Exscientia’s strategy for EXS-21546 is the development of a multi-gene biomarker signature. This allows them to prospectively identify cancer patients whose tumors have a high “adenosine burden” and are therefore most likely to respond to the drug.

- Clinical Advancement: The drug successfully completed a Phase 1a study in healthy volunteers and has advanced into a Phase 1/2 trial in patients with advanced solid tumors.39

Exscientia’s approach highlights how AI can be used not just for molecular design, but for creating a more holistic, patient-centric development strategy that integrates biomarker discovery from the outset to improve the probability of clinical success.

Recursion Pharmaceuticals: The “Data-First” Industrialist

Recursion Pharmaceuticals has adopted a unique and ambitious strategy: to create an insurmountable data advantage. Their core belief is that with a large enough, high-quality, and relatable dataset, machine learning can decode biology at an unprecedented scale. Their platform, the Recursion OS, is less a single tool and more an industrialized factory for biological data generation.42

- Massive-Scale Experimentation: At the heart of the Recursion OS are automated wet labs that use robotics and computer vision to run up to 2.2 million cellular experiments per week.

- Building “Maps of Biology”: In these experiments, human cells are perturbed (e.g., by knocking out genes with CRISPR or introducing chemical compounds), and high-resolution images are taken to capture the resulting “phenotype.” This data is used to build massive, proprietary “Maps of Biology and Chemistry.”

- A Data Moat: To date, Recursion has generated over 60 petabytes of proprietary biological and chemical data—one of the largest datasets of its kind in the world. This data is their core asset.

- Powerful Infrastructure: To process this data, Recursion has built one of the most powerful supercomputers in the biopharma industry, BioHive-2, in collaboration with NVIDIA.

- Clinical Pipeline: This data-first approach has yielded a robust pipeline of potential medicines, with multiple programs in clinical stages for treating rare genetic diseases like familial adenomatous polyposis and various forms of cancer.

Recursion’s strategy demonstrates a different path to success. While Insilico focuses on the power of generative algorithms and Exscientia on patient-centric design, Recursion is betting that the company with the best map of biology—built from the largest and best dataset—will ultimately win the race to discover new medicines.

A Thriving and Diverse Ecosystem

Beyond these three leaders, a vibrant ecosystem of AI-driven biotechs is rapidly maturing. Companies like BenevolentAI are using their platform to analyze complex biological data, famously identifying an existing drug, baricitinib, as a potential treatment for COVID-19 and advancing AI-discovered candidates for diseases like ALS. Atomwise is leveraging its AtomNet platform for structure-based drug design, signing major collaboration deals with pharmaceutical giants like Sanofi. Meanwhile, Google DeepMind’s spin-off, Isomorphic Labs, has inked massive deals with Eli Lilly and Novartis to apply its next-generation AI models to drug discovery.

The evidence is clear and growing. The number of AI-developed drug candidates entering clinical stages has grown exponentially, from just 3 in 2016 to 67 in 2023. These success stories reveal that there is no single “best” way to apply AI. The competitive landscape is being shaped by a diversity of powerful strategies, from end-to-end generative platforms and precision-medicine-focused design to the brute-force industrialization of data generation. The ultimate winners will be those whose strategies prove most effective at navigating the final, most difficult hurdles of late-stage clinical trials and bringing truly innovative medicines to market.
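A compound annual growth rate (CAGR) gives a rough sense of how steep that growth is. The sketch below applies the standard CAGR formula to two figures quoted in this article: AI-developed candidates in the clinic (3 in 2016 to 67 in 2023) and projected AI investment ($4 billion to $25 billion between 2025 and 2030). The helper name `cagr` is hypothetical, and smooth year-over-year compounding is an assumption, since the article gives only the endpoints.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two endpoints.

    CAGR = (end / start) ** (1 / years) - 1
    """
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Endpoints quoted in the article; the path between them is assumed smooth.
clinical_candidates = cagr(3, 67, 2023 - 2016)   # candidates in clinical stages
ai_investment = cagr(4.0, 25.0, 2030 - 2025)     # investment, in $ billions

print(f"Clinical-stage AI candidates: {clinical_candidates:.1%} per year")
print(f"Projected AI investment:      {ai_investment:.1%} per year")
```

Both series work out to annual growth on the order of 45% to 55%, which is what "exponential" means in practical terms here. A CAGR computed from two endpoints cannot show whether growth was steady or lumpy in between.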

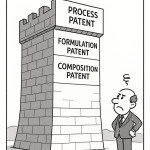

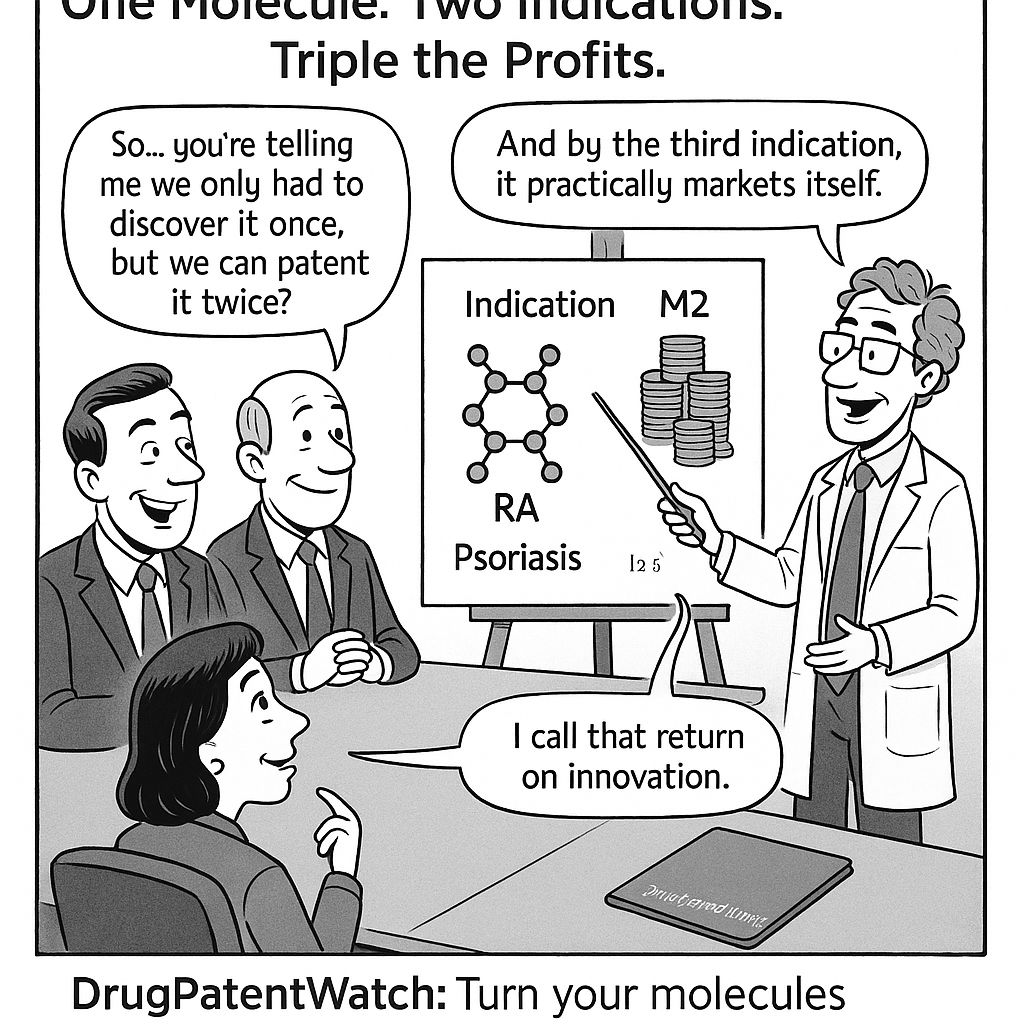

The Competitive Edge: AI, Patents, and Strategic Intelligence

In the hyper-competitive pharmaceutical landscape, innovation is currency, and information is power. Machine learning is rapidly evolving from a powerful R&D tool into a formidable competitive weapon. Companies that effectively harness AI for strategic intelligence and navigate the complex, evolving landscape of intellectual property (IP) will not only accelerate their own pipelines but will also be able to anticipate market shifts and outmaneuver their rivals.

AI as a Competitive Intelligence Engine

The sheer volume of data generated by the global pharmaceutical industry—from patent filings and clinical trial registrations to scientific publications and financial reports—is overwhelming. AI provides the means to systematically monitor and analyze this torrent of information to extract actionable competitive intelligence (CI).

AI-powered platforms can be trained to track competitors’ R&D priorities, monitor the progress of their clinical trial pipelines, flag new regulatory filings, and analyze M&A activity in real-time.47 Using NLP, these systems can digest vast quantities of unstructured text from news articles, earnings call transcripts, and social media, gauging sentiment and identifying emerging strategic trends. This capability allows companies to move beyond reactive analysis and toward a predictive understanding of the competitive landscape. They can identify “white spaces”—therapeutic areas with high unmet need and low competitive density—and spot promising licensing or acquisition opportunities before they become widely known.

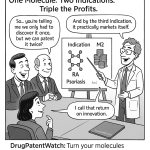

The Strategic Role of Patent Databases

Central to any pharmaceutical CI strategy is the analysis of patent data. Patent databases are a treasure trove of information, revealing competitors’ research paths, technological capabilities, and future commercial intentions. Platforms like DrugPatentWatch provide a critical service by offering fully integrated databases of drug patents, ongoing litigation, patent expiration dates, and information on generic and biosimilar challengers. Strategic leaders use this intelligence to inform portfolio management, anticipate the loss of market exclusivity for branded drugs, assess the track record of patent challengers, and gain deep insights into the R&D strategies of their competitors.49

AI is amplifying the power of this analysis. Machine learning algorithms can be applied to patent databases to perform large-scale text analysis, uncovering latent connections between different patents and identifying emerging technological trends that might not be obvious from manual review. In a striking example of this capability, the technology firm Quytech developed a generative AI-powered tool specifically designed to analyze pharmaceutical patent documents and research papers to instantly suggest alternative molecules and formulations that could be used to legally circumvent existing patents, drastically reducing research timelines for generic and “me-too” drug developers.

The AI Inventorship Dilemma: A New IP Frontier

As AI’s role shifts from an assistive tool to a creative partner in discovery, it is creating unprecedented challenges for the legal framework of intellectual property. A critical question has emerged: if an AI system designs a novel drug molecule, who—or what—is the inventor?

Current patent law in major jurisdictions like the United States and the United Kingdom is unequivocal: an inventor must be a human being. In the landmark 2022 case Thaler v. Vidal, the U.S. Court of Appeals for the Federal Circuit firmly rejected an attempt to name the AI system DABUS as an inventor on these grounds. However, recognizing the reality of modern R&D, the U.S. Patent and Trademark Office (USPTO) issued crucial guidance in 2024. It clarified that an invention that was created with the assistance of AI is still patentable, provided that one or more humans made a “significant contribution” to the conception of the invention.

This guidance has profound strategic implications. To secure their IP, companies must now meticulously document the human-AI interaction at every stage of the discovery process. They need to prove that human scientists were actively involved in defining the problem, curating the training data, interpreting the AI’s output, and selecting the final drug candidate. This has also created a critical strategic choice: should a company patent an AI-discovered drug, which requires disclosing details about the AI methodology and data, or should it protect its proprietary AI algorithms as a trade secret, which offers less protection for the final molecule itself? Companies like Relay Therapeutics are pursuing a hybrid approach, patenting their drug candidates while keeping the underlying simulation platforms confidential.

Big Pharma’s Response: The “Buy and Partner” Strategy

Faced with this rapidly evolving landscape, the industry’s largest players are aggressively pursuing a “buy and partner” strategy to integrate AI capabilities. Rather than building everything from the ground up, they are forming deep, strategic collaborations with AI-native biotechs and major technology companies. This creates a new, symbiotic ecosystem where Big Pharma provides the scale, deep biological and clinical data, and late-stage development expertise, while the AI companies provide the cutting-edge platforms and computational talent. The table below provides a snapshot of how some of the top pharmaceutical companies are strategically embracing the AI revolution.

AI Strategy Snapshot: How Top Pharma is Embracing the Revolution

| Company | CEO/Leadership View on AI | Key AI Initiatives/Platforms | Strategic Focus |
| --- | --- | --- | --- |
| Pfizer | Focused on using AI to collapse timelines and achieve massive efficiency gains. “While competitors are six months into molecule identification, Pfizer is already moving to the next phase”. | Partnership with AWS for generative AI across manufacturing and research; partnership with Flagship Pioneering’s Logica platform. | Accelerating preclinical discovery from years to 30 days; boosting manufacturing throughput; reducing clinical documentation time by 80%.53 |
| Roche | Embracing a “lab in a loop” concept where AI models are trained on lab/clinic data to generate predictions that are then experimentally tested, creating a virtuous cycle of learning. | Genentech’s “lab in a loop” initiative; collaborations with NVIDIA for accelerated computing and AWS for data processing. | Using generative AI for drug discovery and development; optimizing antibody design; selecting neoantigens for personalized cancer vaccines.55 |
| Merck | Pursuing an enterprise-wide “AI360” strategy to innovate with AI, scale through AI, and enable the future of AI via their materials science division. | Internal generative AI platform for clinical document creation; ranked #2 in the Pharma AI Readiness Index.57 | Accelerating drug discovery, development, and manufacturing; boosting patient adherence with digital health platforms. |
| AbbVie | Focused on data convergence, centralizing vast internal and external data sources to empower scientists with AI/ML tools to “connect the dots”. | The AbbVie R&D Convergence Hub (ARCH), an industry-leading analytics platform connecting over 200 data sources. | Finding new drug targets; optimizing clinical trial site selection; developing precision medicines; using generative AI for protein design.59 |
| Novartis | CEO Vas Narasimhan emphasizes that while AI can accelerate many steps, its true impact on designing better medicines will become clear in the next 5-7 years. Fostering a “curious and unbossed” culture to pioneer new technologies. | Significant internal investments in AI and data science; major collaboration with Isomorphic Labs. | Pioneering new technology platforms (cell, gene, RNA therapies) and using AI as an enabling technology across the portfolio.61 |

This flurry of partnerships highlights a critical truth about the new competitive landscape. The innovation ecosystem is no longer just about who has the best chemists; it is about who controls the best data and the smartest algorithms. As these collaborations deepen, the ownership and governance of data and the resulting intellectual property will become the central battleground. A 2025 lawsuit between BioNTech and Nucleai over the IP rights to insights generated from an AI model trained on shared data is an early tremor of the legal earthquakes to come. The companies that will lead the next decade of pharmaceutical innovation will be those who are not only scientifically advanced but also legally and strategically masterful in navigating this new world of collaborative, data-driven discovery.

Navigating the Gauntlet: Overcoming Key Challenges in AI Implementation

For all its transformative potential, the path to integrating AI into pharmaceutical R&D is not a simple plug-and-play operation. It is a journey fraught with significant technical, cultural, and regulatory challenges that can stall progress and undermine the value of even the most sophisticated algorithms. Acknowledging and strategically addressing these hurdles is crucial for any organization looking to move beyond pilot projects and achieve true, scalable impact. Three interconnected challenges stand out as the primary barriers to widespread adoption: the data dilemma, the “black box” conundrum, and the regulatory labyrinth.

The Data Dilemma: Quality, Accessibility, and Standardization

The single most critical axiom in the world of machine learning is “garbage in, garbage out.” An AI model is only as good as the data it is trained on, and this represents the greatest vulnerability for AI in drug discovery. Biomedical data is notoriously challenging. It is often:

- Noisy and Sparse: Experimental data is subject to inherent biological variability and measurement error. For many areas, especially rare diseases or novel targets, high-quality data is simply scarce, which can lead to models that “overfit” to the small amount of available data and fail to generalize to new situations.

- Fragmented and Siloed: In many large pharmaceutical companies, data is trapped in disparate, legacy systems across different departments. R&D, clinical, and manufacturing data may be stored in proprietary formats that are incompatible with one another, making the creation of a unified, analysis-ready dataset a monumental data engineering challenge.64

- Lacking Negative Data: The scientific publishing world has a strong bias towards positive results. Experiments that “fail” are rarely published. This starves ML models of crucial “negative data,” which is just as important as positive data for learning the true boundaries of a predictive problem.

- Inaccessible: Even when data exists, it may be difficult to access due to intellectual property rights, patient privacy concerns (governed by regulations like HIPAA and GDPR), or a simple lack of incentives for organizations to share their valuable data assets.3

This lack of high-quality, standardized, and accessible data is consistently cited by industry experts and government bodies as the primary obstacle hindering the adoption and impact of machine learning in drug development.3

The “Black Box” Conundrum: Interpretability vs. Accuracy

A frustrating paradox exists at the heart of modern AI: often, the most powerful and accurate models are also the least transparent. Deep learning models, with their millions of interconnected parameters, can function as “black boxes.” They can take an input (like a molecule’s structure) and produce a highly accurate output (a toxicity prediction), but it can be difficult or impossible to understand the internal logic or biological reasoning that led to that prediction.15

This lack of interpretability poses a major problem in a field that is built on the scientific method.

- Erodes Trust: How can a medicinal chemist trust an AI’s suggestion to synthesize a novel molecule if they cannot understand the rationale behind its design? How can a clinician trust an AI-driven diagnostic if the model cannot explain its conclusion? This opacity is a significant barrier to adoption by the very experts the tools are meant to help.70

- Hinders Scientific Discovery: The goal of AI is not just to get the right answer, but to help us understand the “why.” An uninterpretable model might correctly predict a drug-target interaction, but it does not advance our fundamental understanding of the underlying biology.

- Creates Regulatory Hurdles: Regulatory agencies like the FDA require clear, evidence-based justifications for a drug’s safety and efficacy. Submitting a drug for approval based on the output of an inscrutable “black box” model is a non-starter.63

To address this, the field of Explainable AI (XAI) has emerged, developing techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) that aim to provide insights into why a model made a particular decision.71 However, a vigorous debate is ongoing. Some experts, like Professor Cynthia Rudin, argue that for high-stakes decisions like those in medicine, we should avoid trying to “explain” a black box after the fact and instead focus on creating models that are inherently interpretable from the ground up, even if it means a slight trade-off in predictive accuracy.72
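To give a feel for what a SHAP-style explanation actually computes, the sketch below calculates exact Shapley values for a tiny, made-up scoring function. It does not use the `shap` library, which relies on faster approximations for real models; it simply enumerates every feature subset, which is only feasible for a handful of features. The model `toy_toxicity_score`, its coefficients, the feature names, and the baseline molecule are all hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley attribution of predict(x) - predict(baseline).

    Features outside a coalition are set to their baseline value.
    Cost grows as 2**n, so this is for illustration only.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(len(others) + 1):
            # Standard Shapley weight for a coalition of size k
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_toxicity_score(z):
    """Hypothetical score with one interaction term (not a real model)."""
    logp, mol_weight, h_donors = z
    return 0.5 * logp + 0.002 * mol_weight + 0.3 * logp * h_donors


x = [3.0, 400.0, 2.0]         # molecule being explained (made-up values)
baseline = [1.0, 300.0, 0.0]  # reference molecule (made-up values)
phi = shapley_values(toy_toxicity_score, x, baseline)

for name, contribution in zip(["logP", "mol_weight", "h_donors"], phi):
    print(f"{name}: {contribution:+.3f}")
```

The attributions sum to the difference between the prediction for the molecule and the prediction for the baseline, and the interaction term is split between the two features involved. That additive bookkeeping is what makes the output readable to a chemist, and it is also why critics note that such an explanation describes the model's arithmetic rather than the underlying biology.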

The Regulatory Labyrinth: Charting a Course with the FDA

The dynamic, complex, and rapidly evolving nature of AI presents a unique challenge for regulatory bodies tasked with ensuring public safety. How do you create a regulatory framework for a technology that can learn and change over time?

The FDA has acknowledged the surge in drug development submissions that incorporate AI components and is actively working to establish clear guidelines. In a landmark move, the agency released its first draft guidance on the use of AI in drug development in January 2025.75

The centerpiece of this proposed guidance is a “risk-based credibility assessment framework.” The level of scrutiny and validation required for an AI model will depend on its specific Context of Use (COU) and the potential risk associated with an incorrect output.79 An AI model used for internal discovery research would face less scrutiny than one used to determine the final dose for a pivotal Phase III trial.

Key issues that the FDA and other regulators are focused on include:

- Data Integrity and Bias: Ensuring the data used to train and validate models is relevant, reliable, and representative of the intended patient population.

- Model Transparency and Interpretability: As discussed, regulators need to understand how a model works to trust its outputs.

- Uncertainty Quantification: The ability to accurately measure and report the level of confidence or uncertainty in a model’s predictions.

- Algorithmic Drift: Managing the risk that a model’s performance may degrade or change over time as it is exposed to new data.75
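As one illustration of how the drift item above might be monitored in practice, the sketch below computes a Population Stability Index (PSI), a widely used measure of how far the distribution of a model input has moved from the data the model was trained on. It is a proxy for drift in inputs rather than a direct measure of degraded performance, and nothing here is prescribed by the FDA. The function name, the bin count, and the sample data are assumptions chosen for the example.

```python
import math


def population_stability_index(expected, actual, n_bins=5, eps=1e-6):
    """PSI between a reference sample and a new sample of one feature.

    Bins are equal-width over the reference range; new values outside
    that range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # avoid zero width for constant data

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # eps keeps the logarithm finite when a bin is empty
        return [max(c / len(values), eps) for c in counts]

    e = bin_fractions(expected)
    a = bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Made-up example: a lab measurement at training time vs. after a shift.
training_values = [i / 10 for i in range(100)]
new_values = [v + 5.0 for v in training_values]

print("PSI, same data:   ", population_stability_index(training_values, training_values))
print("PSI, shifted data:", round(population_stability_index(training_values, new_values), 2))
```

A common rule of thumb treats a PSI above roughly 0.25 as a substantial shift worth investigating, though any threshold would need to be justified for the specific context of use.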

The FDA is strongly encouraging sponsors to engage with the agency early and often when planning to use AI in their regulatory submissions to navigate these complex issues collaboratively.

These three challenges—data, interpretability, and regulation—are not independent problems; they are deeply intertwined. The “black box” issue is a primary concern for regulators. The FDA’s proposed solution requires sponsors to provide comprehensive documentation on the data used to train their models, directly linking the regulatory challenge back to the data challenge. To satisfy regulators, companies must first establish robust data governance and quality control. At the same time, XAI tools are critical for both diagnosing potential biases in the training data and providing the transparency that regulators demand. Successfully implementing AI at scale requires a holistic strategy that addresses these interconnected challenges in a unified way.

The Next Frontier: Charting the Future of AI in Drug Discovery

The current applications of AI in drug discovery, while revolutionary, are merely the first wave of a much larger technological tsunami. As computational power increases, algorithms become more sophisticated, and our ability to generate high-quality biological data grows, we are moving toward a future of pharmaceutical R&D that looks more like science fiction than today’s laboratories. Three converging trends are shaping this next frontier: the rise of self-driving labs, the dawn of quantum machine learning, and the pinnacle of personalization in medicine.

The Rise of Self-Driving Labs: Automating the Scientific Method

Imagine a laboratory that never sleeps. A facility where an AI is the lead scientist, designing experiments based on a high-level research goal. Robotic arms and automated instruments then execute these experiments with perfect precision. The resulting data is instantly collected, analyzed, and fed back to the AI, which learns from the outcome and immediately designs the next, more intelligent experiment. This is the vision of the “self-driving lab”—a fully autonomous, closed-loop system for scientific discovery.35

This is not a distant dream; it is happening now. Research institutions like Argonne National Laboratory and the University of Sheffield, along with companies like Kebotix, are building and operating these advanced platforms.84 Analogous to self-driving cars, these labs use a combination of AI “brains” and robotic “hands” to navigate the complex landscape of chemical and materials discovery.

The impact on research productivity is profound. By operating 24/7 and optimizing experiments in real-time, self-driving labs can accelerate discovery cycles from months or years down to mere days or even hours.35 Recent breakthroughs have demonstrated systems that can collect experimental data at least 10 times faster than previous automated techniques, allowing the AI to learn more quickly and home in on optimal solutions with unprecedented speed. This not only accelerates progress but also makes research more sustainable by dramatically reducing the amount of chemical waste generated. While significant challenges remain in software orchestration and the integration of diverse instruments 88, the trajectory is clear. Self-driving labs are poised to industrialize the scientific method itself, transforming the very nature of how research is conducted.
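The closed loop described in this section (design an experiment, execute it, analyze the result, design the next one) can be sketched in a few lines. The example below is a toy: `run_experiment` stands in for the robotic step with a made-up yield curve, and the "AI" is a simple narrowing search rather than the Bayesian optimization or learned models that real platforms typically use. All names and numbers are hypothetical.

```python
def run_experiment(temperature_c: float) -> float:
    """Stand-in for the robot: a made-up yield curve peaking at 72 C."""
    return 100.0 - 0.05 * (temperature_c - 72.0) ** 2


def closed_loop(lo: float, hi: float, n_rounds: int = 20):
    """Design -> execute -> analyze -> redesign, repeated n_rounds times."""
    center = (lo + hi) / 2.0
    step = (hi - lo) / 4.0
    best_yield, best_temp = float("-inf"), center
    history = []

    for _ in range(n_rounds):
        # Design: propose conditions around the current best estimate.
        candidates = [center - step, center, center + step]
        # Execute and analyze: run each condition and record the outcome.
        results = [(run_experiment(t), t) for t in candidates]
        history.extend(results)
        round_yield, round_temp = max(results)
        if round_yield > best_yield:
            best_yield, best_temp = round_yield, round_temp
        # Learn: recentre on the best condition so far and search finer.
        center = best_temp
        step /= 2.0

    return best_temp, best_yield, history


best_temp, best_yield, history = closed_loop(20.0, 120.0)
print(f"Best temperature found: {best_temp:.3f} C after {len(history)} experiments")
```

The point of the sketch is the structure of the loop, not the search strategy: each round's results decide what is run next, with no human in between. In a real lab, the experiment call would be slow, noisy, and expensive, which is why the choice of the next experiment matters so much.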

The Quantum Leap: When AI Meets Quantum Computing

While today’s supercomputers are incredibly powerful, they still struggle to accurately simulate the complex quantum mechanical interactions that govern how molecules behave. This is a fundamental barrier in drug discovery, as predicting a drug’s binding affinity or its precise mechanism of action requires understanding these interactions perfectly. Quantum computing, a new paradigm of computation that leverages the principles of quantum mechanics, promises to shatter this barrier.

The synergy between AI and quantum computing is poised to be one of the most powerful technological combinations of the 21st century. As one expert put it, “AI provides the brains and quantum computing provides the power”. The emerging field of Quantum Machine Learning (QML) aims to develop algorithms that run on quantum computers, potentially solving certain types of problems exponentially faster than any classical computer ever could.92

In drug discovery, the primary application will be to perform molecular simulations with near-perfect accuracy. An AI model could propose a novel drug candidate, and a quantum computer could then simulate its interaction with a target protein at the subatomic level, providing a definitive prediction of its efficacy and potential side effects. This could enable researchers to tackle previously “undruggable” targets and could, in theory, reduce certain aspects of the R&D timeline from years to weeks. This powerful fusion is already being explored, with researchers at the University of Toronto and Insilico Medicine recently demonstrating how a quantum-enhanced algorithm could identify potential inhibitors for a notorious cancer-driving protein.36

The Pinnacle of Personalization: AI-Driven Precision Medicine

The ultimate goal of medicine is not just to treat diseases, but to treat individual patients. The current “one-size-fits-all” model of drug development, where a single drug is designed for a broad patient population, is a blunt instrument. AI is enabling a future of hyper-personalized, precision medicine, where therapies are tailored to a patient’s unique genetic makeup, lifestyle, and environmental exposures.94

This is already a reality in fields like oncology, where AI algorithms analyze the genomic sequence of a patient’s tumor to identify specific mutations and recommend the targeted therapy most likely to be effective.97 But this is just the beginning. The future of personalized medicine, powered by AI, includes:

- Multimodal Analysis: AI platforms will integrate a patient’s complete biological profile—genomics, proteomics, metabolomics, clinical history, and even data from wearable devices—to create a holistic digital picture of their health.95

- Predictive Response Modeling: Based on this multimodal data, AI will predict with high accuracy how an individual patient will respond to a wide range of potential treatments, allowing clinicians to select the optimal therapy and dosage from the outset.94

- “Digital Twins”: The concept of creating a virtual, computational model of an individual patient is gaining traction. These “digital twins” could be used to simulate the progression of a disease and test the effects of various treatments in silico before ever administering a drug to the actual patient.

- Real-Time Adaptive Therapies: AI will be integrated directly into therapeutic devices, such as smart insulin pumps that continuously monitor glucose levels and adjust insulin delivery in real time for patients with diabetes.
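
To make the "monitor, then adjust" idea behind real-time adaptive therapies concrete, here is a minimal sketch of a feedback loop in Python. It is a toy illustration only, not a clinical dosing algorithm: the function name, the proportional rule, the linear "patient response," and every number (arbitrary units) are assumptions invented for this example. Real devices rely on validated physiological models and extensive safety constraints.

```python
def simulate_closed_loop(start_level=180.0, target=110.0, gain=0.1,
                         sensitivity=2.0, drift=1.0, steps=48):
    """Toy feedback loop in arbitrary units; NOT a clinical dosing algorithm.

    All parameters are hypothetical and chosen only to show the control pattern.
    """
    level = start_level
    history = [level]
    for _ in range(steps):
        # 1. Monitor: compare the current reading with the target.
        error = level - target
        # 2. Decide: respond in proportion to the error, never with a negative dose.
        dose = max(0.0, gain * error)
        # 3. Act: hypothetical response = upward drift minus the effect of the dose.
        level = level + drift - sensitivity * dose
        history.append(level)
    return history


if __name__ == "__main__":
    trace = simulate_closed_loop()
    print(f"start={trace[0]:.1f}, end={trace[-1]:.1f}")
```

The same loop structure (read, decide, act, repeat) is what an AI-driven device would run, with the simple proportional rule replaced by a learned, patient-specific model.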

These future trends are not developing in isolation; they are converging toward a single, powerful vision for the future of healthcare: an autonomous, personalized medicine factory. In this future, a patient’s unique biological data could be collected and fed into a quantum-enhanced AI model. This model would then design a bespoke drug molecule specifically tailored to that individual’s disease. The design would be sent to a self-driving lab, which would autonomously synthesize, test, and formulate the personalized medicine. This entire workflow, from patient data to personalized pill, could one day be largely automated. This is the ultimate endpoint of the AI revolution in pharma—the industrialization of bespoke medicine creation, delivering the right drug, for the right patient, at the right time.

Conclusion: The Dawn of a New Pharmaceutical Era

The pharmaceutical industry stands at a historic inflection point. The traditional model of drug discovery, a testament to a century of scientific progress, has reached the limits of its economic and operational efficiency. Eroom’s Law has cast a long shadow, threatening the sustainability of an R&D engine that has become too slow, too costly, and too prone to failure. The need for a new paradigm has never been more urgent.

Machine learning has emerged as that paradigm. It is not an incremental improvement or a fleeting technological trend; it is a fundamental, disruptive force on par with the discovery of antibiotics or the advent of biologics. AI is systematically deconstructing the old, linear pipeline and reassembling it into an intelligent, interconnected, and predictive ecosystem. From identifying novel disease targets in the silent depths of genomic data to designing entirely new molecules in the digital realm of a computer, ML is compressing timelines, slashing costs, and, most critically, dramatically increasing the probability of success.

The evidence is no longer speculative. AI-discovered drugs are now in human trials, demonstrating superior success rates and validating the power of this new approach. An entire ecosystem of AI-native biotechs has risen, pioneering diverse and powerful strategies that are challenging the industry’s incumbents. In response, Big Pharma is engaging in a flurry of partnerships and acquisitions, signaling a universal acknowledgment that the future of drug discovery is inextricably linked with artificial intelligence.

Of course, the path forward is not without its obstacles. The formidable challenges of data quality, model interpretability, and regulatory adaptation must be navigated with strategic foresight and collaborative effort. The “garbage in, garbage out” principle remains the Achilles’ heel of any AI system, demanding a renewed, industry-wide focus on data governance and standardization. The “black box” nature of complex models requires a commitment to developing explainable and interpretable AI to build trust with scientists, clinicians, and regulators. And the evolving regulatory landscape necessitates a proactive and transparent dialogue between industry and agencies like the FDA to chart a clear and safe path for innovation.

Yet, these challenges are not insurmountable. They are the birth pangs of a new era. Looking ahead, the convergence of AI with robotics in self-driving labs and the immense potential of quantum computing promise to accelerate this revolution even further, pushing us toward the ultimate goal of truly personalized medicine. The companies that will thrive in this new landscape will be those that do more than simply adopt new software. They will be the ones that fundamentally transform their culture to one of data-centricity, that build agile organizations capable of bridging the gap between biology and computer science, and that foster a deep, symbiotic collaboration between human expertise and artificial intelligence. The algorithmic elixir is here, and it is recoding the future of medicine. The race to master it has already begun.

Key Takeaways

- The Industry is at a Tipping Point: Traditional drug R&D is economically unsustainable, with costs exceeding $2.23 billion per drug and failure rates above 99%. AI is not just an efficiency tool but a necessary paradigm shift to solve this crisis.

- AI Impacts the Entire R&D Value Chain: Machine learning is being applied at every stage, from AI-driven target identification (e.g., AlphaFold) and de novo molecular design (generative AI) to predictive ADMET analysis and the optimization of clinical trial design and patient recruitment.

- The ROI is Tangible and Compelling: AI has been shown to dramatically shorten timelines (e.g., from 5-6 years to under 2 for preclinical work), reduce costs (by up to 70% in clinical trials), and significantly increase success rates (reported Phase I success rates for AI-discovered drugs are 80-90%, roughly double the historical rate).

- Success Stories Provide Real-World Proof: Companies like Insilico Medicine, Exscientia, and Recursion Pharmaceuticals have successfully advanced AI-discovered drugs into human clinical trials, each demonstrating a unique and powerful strategic approach to AI-driven discovery.

- Data is the New Competitive Moat: The primary challenge and greatest opportunity in AI-driven pharma is data. Companies that can generate, curate, and leverage large-scale, high-quality, proprietary datasets will have a decisive competitive advantage.

- Navigating Challenges is Key to Success: The main barriers to adoption are the “three I’s”: inadequate Infrastructure and data quality, the Interpretability problem of “black box” models, and the evolving Institutional and regulatory landscape, particularly from the FDA.

- The Future is Autonomous and Personalized: The next frontier will see the convergence of AI with self-driving labs and quantum computing, enabling a future of hyper-personalized medicine where therapies can be designed and developed for individual patients at scale.
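
The headline numbers in these takeaways can be sanity-checked with a few lines of arithmetic. The sketch below uses only figures quoted in this article (one approval per 20,000 to 30,000 early compounds, and preclinical work falling from 5-6 years to under 2); the function names are hypothetical helpers introduced for illustration.

```python
def failure_rate(successes, candidates):
    """Fraction of candidates that do not reach approval."""
    return 1.0 - successes / candidates


def time_saved(old_years, new_years):
    """Fractional reduction in duration when moving from old_years to new_years."""
    return 1.0 - new_years / old_years


if __name__ == "__main__":
    # One approved drug per 20,000-30,000 early-stage compounds.
    for candidates in (20_000, 30_000):
        rate = failure_rate(1, candidates)
        print(f"1 in {candidates:,}: failure rate {rate:.4%}")

    # Preclinical work dropping from 5-6 years to (at most) 2 years.
    for old in (5, 6):
        print(f"{old} years -> 2 years: at least {time_saved(old, 2):.0%} shorter")
```

Because the article says "under 2" years, the computed time reductions are lower bounds rather than exact values.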

Frequently Asked Questions (FAQ)

1. Is AI more likely to help discover small molecules or large biologics?

While much of the initial success and media attention has focused on AI’s application in small molecule discovery, its impact on biologics is growing rapidly and is arguably just as profound. For small molecules, AI excels at de novo design and predicting ADMET properties. For large molecules like antibodies and proteins, AI is transformative in different ways. Deep learning models like AlphaFold are revolutionizing protein structure prediction, which is fundamental to designing biologics. Furthermore, generative AI is now being used to design novel antibodies and peptides from scratch, as demonstrated by Absci’s recent milestones. The core AI capabilities (pattern recognition, prediction, and generation) are modality-agnostic, and we are seeing specialized platforms emerge for both classes of drugs.

2. What is the single biggest non-technical barrier to AI adoption in a large pharma company?

The single biggest non-technical barrier is organizational culture and inertia. Pharmaceutical companies are traditionally siloed organizations with deeply entrenched workflows built around experimental science. Successfully integrating AI requires a fundamental cultural shift towards data-centricity and cross-functional collaboration. It necessitates breaking down the walls between biologists, chemists, data scientists, and IT professionals. Experimental scientists may be skeptical of computational approaches, and there is a shortage of interdisciplinary talent who can speak the languages of both biology and machine learning.3 Overcoming this requires strong, top-down executive leadership that champions the new vision and invests in training and new organizational structures.

3. How can a company protect its intellectual property when using third-party AI platforms and datasets?

This is a critical and complex legal challenge. The best practice is to establish crystal-clear data ownership, usage rights, and IP licensing terms in contractual agreements before any collaboration begins. Key strategies include:

- Delineating Background vs. Foreground IP: Clearly define what data and algorithms each party brings to the collaboration (background IP) and establish who owns the inventions that result from the collaboration (foreground IP).

- Hybrid IP Strategy: Protect core, proprietary AI algorithms and models as trade secrets, while filing patents on the novel drug candidates they produce. This avoids having to disclose the “secret sauce” of the AI platform in a patent application.

- Data Provenance: Use robust data governance and even technologies like blockchain to maintain a clear, auditable trail of where data came from and how it was used to train models, which can be crucial in resolving attribution disputes.
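
The "clear, auditable trail" in the data provenance point can be illustrated without a full blockchain. The sketch below is a minimal hash-chained log built on Python's standard `hashlib` and `json` modules: each record stores the hash of the one before it, so a later change to any earlier entry becomes detectable. The function names and record fields are assumptions made for this example, not a reference to any specific product or standard.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash, payload):
    """SHA-256 over a canonical JSON encoding of the record contents."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_record(chain, payload):
    """Append a provenance entry that is linked to the previous entry's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    record = {"prev": prev_hash, "payload": payload,
              "hash": _digest(prev_hash, payload)}
    chain.append(record)
    return record


def verify_chain(chain):
    """Return True only if every link and every stored hash is still consistent."""
    prev_hash = GENESIS
    for record in chain:
        if record["prev"] != prev_hash:
            return False
        if record["hash"] != _digest(record["prev"], record["payload"]):
            return False
        prev_hash = record["hash"]
    return True


if __name__ == "__main__":
    log = []
    # Hypothetical events: a partner dataset is received, then used for training.
    append_record(log, {"event": "dataset_received", "source": "partner_A"})
    append_record(log, {"event": "model_trained", "dataset": "partner_A"})
    print("intact:", verify_chain(log))
    log[0]["payload"]["source"] = "partner_B"  # simulate tampering
    print("after tampering:", verify_chain(log))
```

A log like this only shows that records have not been altered since they were written. Who may write to it, and where copies are held, still has to be settled in the collaboration agreement.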

4. Will AI replace medicinal chemists and biologists?

No, AI is not expected to replace human scientists. Instead, it will augment their capabilities and change their roles. AI is a powerful tool for generating hypotheses, analyzing data, and handling complexity at a scale that humans cannot. However, it still requires human expertise to define the right scientific questions, interpret the results in a biological context, make strategic decisions, and design the critical experiments needed for validation. The future R&D professional will be a “human-in-the-loop,” collaborating with AI systems. The role of a medicinal chemist may shift from manual, iterative design to overseeing and guiding generative AI models, while a biologist’s role may focus more on designing sophisticated experiments to validate AI-generated hypotheses in complex biological systems.99

5. What is a realistic timeline for a mid-sized pharma company to build a meaningful AI capability?

Building a meaningful, integrated AI capability from scratch is a multi-year endeavor. A realistic timeline can be broken into phases:

- Phase 1 (Year 1): Foundational Setup. This involves establishing a clear AI strategy, securing executive buy-in, and making initial investments in data infrastructure. The focus is on data governance: breaking down silos, standardizing data formats, and creating a centralized data lake. This phase also includes hiring a core team of data scientists and computational biologists.

- Phase 2 (Years 2-3): Pilot and Validate. The team would start with well-defined pilot projects in specific areas, such as building an ADMET prediction model or using an NLP tool for literature review. This demonstrates early wins, builds organizational trust, and helps refine the broader strategy. This is also the time to evaluate and form strategic partnerships with external AI companies.

- Phase 3 (Years 4-5+): Scale and Integrate. Based on the success of pilot projects, the company can begin to scale its capabilities, investing in more advanced platforms (e.g., for generative chemistry) and integrating AI tools across the R&D workflow. This involves a significant investment in both technology and talent, with the goal of creating a fully integrated, learning R&D ecosystem.

It is a marathon, not a sprint, and success depends more on a sustained, strategic commitment than on a single, large investment.

References

- Perspective on the challenges and opportunities of accelerating drug discovery with artificial intelligence – Frontiers, accessed August 3, 2025, https://www.frontiersin.org/journals/bioinformatics/articles/10.3389/fbinf.2023.1121591/full

- AI-driven innovations in pharmaceuticals: optimizing drug discovery …, accessed August 3, 2025, https://pubs.rsc.org/en/content/articlehtml/2025/pm/d4pm00323c

- Artificial Intelligence in Health Care: Benefits and Challenges of Machine Learning in Drug Development [Reissued with revisions on Jan. 31, 2020.] – GAO, accessed August 3, 2025, https://www.gao.gov/products/gao-20-215sp

- Maximizing ROI on Drug Development by Monitoring Competitive …, accessed August 3, 2025, https://www.drugpatentwatch.com/blog/maximizing-roi-on-drug-development-by-monitoring-competitive-patent-portfolios/

- Stages of Drug Development – Friedreich’s Ataxia Research Alliance, accessed August 3, 2025, https://www.curefa.org/stages-of-drug-development/

- (PDF) Machine Learning Applications in Drug Discovery – ResearchGate, accessed August 3, 2025, https://www.researchgate.net/publication/384376275_Machine_Learning_Applications_in_Drug_Discovery

- Applications of machine learning in drug discovery and development – PMC, accessed August 3, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC6552674/

- Why Big Pharma is Quietly Using AI: Industry Insights for 2025 – PharmaDiversity Blog, accessed August 3, 2025, https://blog.pharmadiversityjobboard.com/?p=408

- The Drug Development Process | FDA, accessed August 3, 2025, https://www.fda.gov/patients/learn-about-drug-and-device-approvals/drug-development-process

- 8 Applications of Machine Learning in The Pharmaceutical Industry …, accessed August 3, 2025, https://www.drugpatentwatch.com/blog/8-applications-machine-learning-pharmaceutical-industry/

- Machine Learning Techniques Revolutionizing Target Identification …, accessed August 3, 2025, https://dev.to/clairlabs/machine-learning-techniques-revolutionizing-target-identification-in-drug-discovery-1h8c

- How does AI assist in target identification and validation in drug …, accessed August 3, 2025, https://synapse.patsnap.com/article/how-does-ai-assist-in-target-identification-and-validation-in-drug-development

- AI In Action: Redefining Drug Discovery and Development – PMC, accessed August 3, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC11800368/