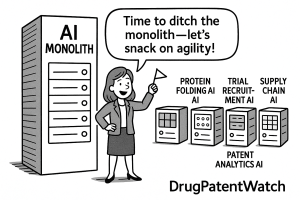

Introduction: The Unbearable Weight of the AI Monolith

For decades, the engine of innovation has been sputtering against a formidable headwind known as Eroom’s Law—Moore’s Law in reverse. Instead of becoming exponentially cheaper and faster, the process of bringing a new drug to market has become crushingly expensive and slow. The numbers paint a stark picture: a timeline stretching over a decade, costs soaring past $2.6 billion per approved therapy, and a staggering failure rate where over 90% of new molecular entities never reach the patients who need them.1 This is not a sustainable trajectory. The pressure from patent cliffs, pricing regulations, and the sheer complexity of modern biology demands a radical reinvention of how we operate.

In this high-stakes environment, Artificial Intelligence has been heralded as our salvation. The promise was, and still is, immense: AI would decode complex diseases, design novel molecules at lightning speed, and optimize every facet of the value chain. In the rush to capture this promise, many organizations invested in what I call the “AI Monolith”—massive, all-encompassing platforms designed to be the single source of truth, the one system to rule them all. The allure was understandable; a single, integrated solution felt powerful and comprehensive.

Yet, this monolithic dream has, for many, become an operational nightmare. These colossal systems, built on a single, unified codebase, are incredibly difficult to change. They are rigid, slow to adapt, and create a powerful “technology adoption barrier”. In a field where a new generative model or a breakthrough algorithm is announced every few months, being locked into a technology stack from five years ago is a death sentence for innovation. The very tool meant to accelerate progress has become an anchor, weighing down the organization with its own complexity and inertia.

So, how do we break free? The answer lies in a fundamental paradigm shift. We must move away from the heavy, multi-course meal of the AI monolith and embrace a more agile, flexible, and potent strategy. I call it “Snackable AI.”

This isn’t just a catchy phrase; it’s a strategic and architectural pivot towards a decentralized ecosystem of smaller, specialized, and independently deployable AI modules, built on a microservices architecture. Instead of one giant, inflexible system, imagine a curated menu of high-impact AI “snacks”—a service that excels at predicting protein folding, another that masters patient recruitment for clinical trials, a third dedicated to supply chain forecasting. These modules are lightweight, interchangeable, and can be combined in novel ways to solve specific business problems with speed and precision.

This report will make the case that to survive and thrive, the pharmaceutical industry must dismantle its monolithic AI ambitions and embrace this more agile, modular, and “snackable” approach. We will demonstrate that this is the key to accelerating innovation, driving unprecedented efficiency, and building a sustainable competitive advantage for the 21st century. This is not merely a technological upgrade; it is a necessary economic response to the existential threat of Eroom’s Law. The business pain is the cause; this architectural shift is the effect.

The Architectural Divide: Monolithic Giants vs. Agile Microservices

To truly grasp the strategic imperative of “Snackable AI,” we first need to understand the fundamental technological choice at its core: the battle between monolithic and microservices architectures. This isn’t just a debate for your IT department; it’s a C-suite decision that will profoundly shape your company’s agility, culture, and competitive posture for years to come.

Deconstructing the Monolith: The All-in-One Powerhouse and Its Flaws

Imagine your entire R&D and operational software platform as a single, massive, tightly-woven factory. Every production line—from early-stage discovery to clinical data analysis and supply chain management—is physically and logically connected. This is the essence of a monolithic architecture: a single, unified application built on one enormous codebase.4

Initially, this approach has its merits. For a small startup or a pilot project, it can be simpler to develop and deploy. With just one executable file to manage, end-to-end testing can feel more straightforward, and debugging is easier since all the code resides in one place. It’s a compelling model when you’re small and speed is paramount.

However, as a pharmaceutical organization scales, this simplicity curdles into crippling complexity. The monolithic factory, once a symbol of integrated power, becomes a prison of its own design. For a complex, global R&D organization, this model is a dead end for several critical reasons:

- Scalability Failure: In a monolith, you cannot scale individual components. Let’s say your in silico molecule screening simulations are experiencing a massive surge in demand. To provide more computational power to that single function, you must deploy and run another entire copy of the massive application. You are forced to scale the reporting dashboards, the regulatory submission module, and every other component just to support one bottleneck. This is grotesquely inefficient and prohibitively expensive.

- Lack of Resilience: The tightly coupled nature of the monolith creates a single, massive point of failure. An error in a seemingly non-critical module—a bug in the code that generates quarterly reports, for instance—can crash the entire platform, bringing your mission-critical drug discovery and clinical trial operations to a grinding halt.4 This fragility is unacceptable when patient lives and billion-dollar programs are on the line.

- Technology Lock-In: This is perhaps the most dangerous flaw in the fast-moving world of AI. A monolithic platform is fundamentally constrained by the technologies and programming languages chosen at its inception. Imagine your platform was built five years ago. Today, a revolutionary generative AI model for designing large molecules is released. Integrating this new tool isn’t a simple upgrade; it could require a complete, multi-year, and astronomically expensive rewrite of your entire platform. This creates an insurmountable barrier to innovation, leaving you watching from the sidelines as more agile competitors race ahead.

The “Snackable” Revolution: A World of Specialized, Interconnected Services

Now, let’s imagine a different model. Instead of one giant factory, picture a modern industrial park filled with dozens of smaller, highly specialized workshops. One workshop excels at protein analysis, another at logistics optimization, and a third at analyzing clinical trial data. Each workshop is independent, can be upgraded or even completely replaced with a newer, better one without disrupting the others, and they all communicate through a standardized, efficient logistics network of roads and shipping lanes.

This is the “Snackable AI” world, built on a microservices architecture. It’s an approach that structures an application as a collection of loosely coupled, independently deployable services, each with its own specific business logic and database.4 This architectural choice unlocks a cascade of strategic advantages perfectly suited to the demands of the modern pharmaceutical industry.

- Targeted Scalability and Cost-Efficiency: With microservices, you scale only what you need. When your genomic data analysis service is under heavy load during a critical research phase, you can dynamically allocate more cloud resources just to that service. When the load subsides, you scale it back down. This “pay-for-what-you-use” model is vastly more cost-effective and allows you to direct resources with surgical precision.

- Enhanced Fault Isolation and Resilience: The independence of services creates a highly resilient system. If the microservice responsible for matching patients to clinical trials experiences a failure, it does not impact the functionality of the supply chain forecasting service or the de novo molecule design tools. The issue is contained, allowing the rest of the business to continue operating without interruption. This fault isolation is essential for ensuring business continuity in mission-critical operations.

- Technological Freedom and Agility: This is the game-changer. A microservices architecture liberates your teams from technology lock-in. The team working on protein folding can use a Python-based service that leverages Google DeepMind’s AlphaFold. The team focused on generative chemistry can build a service in a different language using NVIDIA’s BioNeMo framework. The clinical analytics team can use a third set of tools best suited for real-world data analysis. All these specialized services can coexist and collaborate within the same ecosystem, communicating via lightweight APIs.5 This fosters a culture of using the best tool for the job and enables the rapid adoption of cutting-edge AI breakthroughs as they happen.
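To make the scaling argument concrete, here is a minimal back-of-the-envelope sketch in Python. The component names and hourly cost figures are purely hypothetical, chosen for illustration; the point is only the shape of the arithmetic, not the numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    cost_per_replica: float  # hypothetical hourly cost units, not real pricing


# Hypothetical platform components (illustrative only).
COMPONENTS = [
    Component("molecule_screening", 40.0),
    Component("reporting_dashboards", 10.0),
    Component("regulatory_submissions", 15.0),
    Component("supply_chain_forecasting", 20.0),
]


def monolith_cost(replicas: int) -> float:
    """Every monolith replica carries all components, needed or not."""
    return replicas * sum(c.cost_per_replica for c in COMPONENTS)


def microservices_cost(replicas_by_service: dict) -> float:
    """Each service scales on its own; unlisted services run one replica."""
    return sum(
        c.cost_per_replica * replicas_by_service.get(c.name, 1)
        for c in COMPONENTS
    )


# Scenario: only molecule screening needs five times its usual capacity.
mono = monolith_cost(5)
micro = microservices_cost({"molecule_screening": 5})
print(f"monolith: {mono}, microservices: {micro}")
```

Under these assumed figures, the monolith pays for five copies of everything, while the modular setup pays for five copies of the one service under load and a single copy of the rest.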

A Strategic Scorecard for Pharma Leaders

For leaders tasked with making multi-million dollar technology bets, translating these architectural concepts into tangible business outcomes is paramount. The following scorecard provides a clear, at-a-glance comparison to guide your strategic decision-making.

| Strategic Dimension | Monolithic Approach | Snackable (Microservices) Approach | Implication for Pharma Leaders |
| --- | --- | --- | --- |
| Speed of Innovation | Slow, high-risk, system-wide updates. | Rapid, independent, low-risk updates to individual services. | Your ability to respond to and capitalize on new scientific and technological breakthroughs. |
| Scalability & Cost-Efficiency | Must scale the entire, bloated system to meet demand for one function. | Scale only the specific components under load. | A “pay-for-what-you-use” compute model that avoids massive waste and optimizes operational costs. |
| Risk & Resilience | A single error can bring down the entire mission-critical platform. | Failures are isolated to individual services, protecting the whole. | Ensuring business continuity in R&D, manufacturing, and supply chain operations. |
| Talent & Team Structure | Requires large, slow-moving teams with complex interdependencies. | Enables small, autonomous, domain-focused teams with clear ownership. | The power to attract and retain top tech talent who thrive on autonomy and direct impact. |
| Future-Proofing | Constrained by the technologies chosen at inception (technology lock-in). | Flexibility to adopt the best new AI model or language for each specific task. | The ability to integrate next-generation AI without a complete, multi-year overhaul of your entire system. |

It’s crucial to recognize that this architectural choice is not merely a technical decision; it is a direct intervention in your corporate culture. A monolithic system, by its very nature, encourages a centralized, top-down, and often bureaucratic approach to technology. Decisions are slow, dependencies are numerous, and responsibility is diffused across large, lumbering teams.

In stark contrast, a microservices architecture is a catalyst for an agile culture. It empowers small, autonomous, domain-focused teams—often just five to ten people—to take complete ownership of a specific business capability, from development to deployment and maintenance.4 This model of ownership and accountability is a core tenet of modern agile methodologies and is intensely motivating for the world-class tech talent you need to attract. Therefore, when you choose to adopt “Snackable AI,” you are not just buying a new platform; you are investing in a new, faster, and more innovative way of working.

Deconstructing the Value Chain: Snackable AI in Action

The true power of the “Snackable AI” paradigm becomes evident when we see it applied across the pharmaceutical value chain. This is not a theoretical exercise; it’s a practical strategy for dismantling complexity and injecting speed and intelligence into every stage, from the earliest moments of discovery to the final delivery of medicine to a patient. Let’s walk through how this modular approach is revolutionizing the industry’s core functions.

Reimagining R&D: From Slow Discovery to Autonomous Science

The traditional R&D process is a long, arduous, and failure-prone journey. “Snackable AI” transforms it into a faster, more targeted, and more predictive endeavor by breaking down monumental challenges into manageable, AI-powered tasks.

Agentic AI for Preclinical Research: Your New Autonomous Lab Partner

The most exciting frontier in R&D is the rise of “agentic AI.” Think of an AI agent not as a single model, but as an intelligent orchestrator that can use a collection of specialized microservices—or “tools”—to perform complex, multi-step scientific tasks autonomously.

We are already seeing this in action. Researchers have developed modular frameworks where a large language model (LLM) acts as the “brain,” directing a suite of specialized tools to execute an entire early-stage discovery pipeline. This agent can autonomously:

- Retrieve Data: Call a microservice to pull relevant biomedical data, like FASTA sequences or SMILES representations of molecules from public and private databases.

- Generate Molecules: Use a generative chemistry service to create a diverse set of novel seed molecules.

- Predict Properties: Send these molecules to a suite of ADMET prediction services to forecast their drug-like properties.

- Refine and Optimize: Iteratively refine the molecules based on the prediction results to improve their viability.

- Generate Structures: Employ a 3D modeling service to generate protein-ligand complex structures and estimate binding affinity.

This is the “Snackable AI” philosophy in its purest form: a collection of independent, expert services working in concert under the direction of an intelligent agent. Platforms like Receptor.AI are commercializing this exact concept, offering a unified 4-level architecture that is explicitly modular. It uses an Agentic AI for high-level strategy, which then assembles custom drug discovery workflows from a stack of dozens of predictive and generative AI models, all fed by a comprehensive data engine. This modularity is so granular that they offer distinct platforms for different modalities like small molecules, peptides, and proximity inducers, each using a tailored set of “snackable” AI tools.
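The orchestration pattern described above can be sketched in a few lines of Python. This is an illustrative toy, not any vendor's implementation: every function below is a hypothetical stub standing in for a real microservice, the scoring rule is a placeholder rather than an ADMET model, and the fixed `PLAN` list stands in for the step-by-step decisions an LLM "brain" would make.

```python
from typing import Callable

# Hypothetical stand-ins for specialized services. Each takes the shared
# pipeline state and returns it with new fields added.


def retrieve_data(state: dict) -> dict:
    state["target_sequence"] = "MKTAYIAKQR"  # placeholder FASTA-like string
    return state


def generate_molecules(state: dict) -> dict:
    state["candidates"] = ["CCO", "CCN", "c1ccccc1O"]  # SMILES strings
    return state


def predict_properties(state: dict) -> dict:
    # Toy score (shorter SMILES scores higher); a real service would
    # call an ADMET prediction model here.
    state["scores"] = {s: round(1.0 / len(s), 3) for s in state["candidates"]}
    return state


def refine(state: dict) -> dict:
    # Keep only candidates whose toy score clears an assumed cutoff.
    state["candidates"] = [
        s for s in state["candidates"] if state["scores"][s] >= 0.3
    ]
    return state


def generate_structures(state: dict) -> dict:
    state["complexes"] = [
        f"{state['target_sequence']}:{s}" for s in state["candidates"]
    ]
    return state


TOOLS: dict[str, Callable[[dict], dict]] = {
    "retrieve_data": retrieve_data,
    "generate_molecules": generate_molecules,
    "predict_properties": predict_properties,
    "refine": refine,
    "generate_structures": generate_structures,
}

# In an agentic system the LLM would choose the next tool at each step;
# a fixed plan is assumed here to keep the sketch deterministic.
PLAN = list(TOOLS)


def run_pipeline(plan: list, tools: dict) -> tuple:
    state, trace = {}, []
    for step in plan:
        state = tools[step](state)
        trace.append(step)  # audit trail of which tools were called
    return state, trace


final_state, trace = run_pipeline(PLAN, TOOLS)
print(final_state["complexes"])
```

Because each tool is looked up by name in a registry, swapping one service for a newer model means changing a single entry in `TOOLS`, which is the interchangeability the modular approach is meant to provide.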

Generative Chemistry and De Novo Design as a Service

Beyond orchestrating workflows, specific “snackable” generative AI models are revolutionizing the creative heart of drug discovery: de novo molecule design. Instead of building these incredibly complex models from scratch, companies can now consume them as a service.

A prime example is NVIDIA’s BioNeMo framework. It provides a library of pretrained, state-of-the-art models and enterprise-grade microservices (known as NIMs) that are specifically tailored for the drug discovery pipeline. Your R&D team can simply call a BioNeMo microservice to perform a specific task, like generating a novel molecule with certain desired properties or predicting its interaction with a target protein. This democratizes access to world-class AI, allowing even smaller biotechs to leverage the same power as the industry giants.

The results of this targeted, modular approach are staggering. By focusing specialized AI on the right problems, companies are achieving dramatic acceleration. Insilico Medicine, a pioneer in this space, used its generative AI platform to take a novel drug for idiopathic pulmonary fibrosis from target discovery to a Phase 1 clinical trial candidate in under 18 months, a fraction of the traditional timeline.9 Similarly, a landmark collaboration between BenevolentAI and AstraZeneca deployed an AI agent to rapidly identify potential treatments for chronic kidney disease, reducing the discovery time by an incredible 70%. These aren’t incremental improvements; they are fundamental shifts in the speed of science.

The Hyper-Efficient Clinical Trial: Precision, Speed, and Patient-Centricity

Clinical trials are notoriously the most expensive and time-consuming phase of drug development. They are ripe for disruption by a portfolio of targeted, “snackable” AI solutions that can optimize every step of the process.

Microservices for Intelligent Trial Design and Site Selection

Long before the first patient is enrolled, critical decisions are made in protocol design that have massive downstream consequences. A dedicated analytics microservice can ingest vast amounts of real-world data (RWD) from electronic health records, claims data, and past trial performance to help you design smarter, more efficient protocols. These services can identify overly complex procedures, reduce unnecessary patient burden, and predict potential recruitment bottlenecks. The ROI is immediate and substantial: avoiding just a single protocol amendment can save a company upwards of $535,000 and three months of delay.

Perhaps the most potent “snack” in this domain is AI-powered site selection. Identifying the right clinical trial sites is a notoriously difficult task, with 10% to 30% of activated sites ultimately failing to enroll a single patient. This is a colossal waste of time and money.

A McKinsey analysis of biopharma data found that AI-enabled site selection drives superior trial outcomes by identifying and predicting top-enrolling sites. This AI-driven approach improves the identification of these crucial sites by 30 to 50 percent and accelerates overall patient enrollment by 10 to 15 percent, or more, across therapeutic areas.

This is a clear, quantifiable return on investment delivered by a single, specialized AI application—a perfect example of a high-value “snack.”

AI-Powered Patient Recruitment as a Specialized Tool

Finding the right patients for a trial is another major bottleneck. The traditional process of manually sifting through patient records is slow and error-prone. Here again, specialized AI services are transforming the process. Researchers at the NIH developed an AI tool called TrialGPT, which acts as a dedicated service to analyze patient information and match it against clinical trial databases. In initial tests, it helped providers spend 40% less time screening patients while maintaining the same level of accuracy.

Modern LLM-based microservices are particularly powerful because, unlike older keyword-based search tools, they can understand the nuance and context of unstructured clinical terminology found in doctors’ notes and patient records. This leads to more inclusive and efficient patient-trial matching, accelerating the entire clinical evidence generation process.

The Rise of IoT and Real-Time Monitoring Services

The proliferation of wearable sensors and remote monitoring devices—the Internet of Things (IoT)—is creating a tidal wave of real-time patient data. This data is a goldmine, but only if it can be analyzed effectively. This is where specialized AI microservices come in.

A dedicated service can be designed to ingest data streams from pedometers, blood oxygen monitors, or smart pillboxes.15 This service can then monitor for early signs of adverse events, track patient adherence to the protocol in real time, and provide continuous feedback to clinical teams. Companies like AiCure are already using AI-powered computer vision via a patient’s smartphone to confirm medication adherence, providing a reliable data stream that improves the quality and integrity of trial data. This real-time insight is also a key enabler for adaptive trial designs, where protocols can be adjusted on the fly based on incoming data, making trials more flexible, efficient, and ethical.
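As a rough illustration of what such a monitoring service might compute, the Python sketch below tallies dose events per patient and flags anyone whose adherence falls below a cutoff. The event format, the patient IDs, and the 80% threshold are all assumptions made for this example; a production service would read from a live device stream and follow the trial protocol's own definitions.

```python
from collections import defaultdict


def adherence_rates(events) -> dict:
    """Compute the share of scheduled doses taken, per patient.

    `events` is an iterable of (patient_id, dose_taken) pairs, one per
    scheduled dose. This shape is a hypothetical simplification.
    """
    taken = defaultdict(int)
    scheduled = defaultdict(int)
    for patient_id, dose_taken in events:
        scheduled[patient_id] += 1
        if dose_taken:
            taken[patient_id] += 1
    return {pid: taken[pid] / scheduled[pid] for pid in scheduled}


def flag_low_adherence(events, threshold: float = 0.8) -> dict:
    """Return patients whose adherence rate is below the assumed threshold."""
    return {
        pid: rate
        for pid, rate in adherence_rates(events).items()
        if rate < threshold
    }


# Illustrative smart-pillbox events: (patient_id, dose_taken).
EVENTS = [
    ("P1", True), ("P1", True), ("P1", True), ("P1", True),
    ("P2", True), ("P2", False), ("P2", False), ("P2", True),
]

alerts = flag_low_adherence(EVENTS)
print(alerts)
```

Keeping this logic in its own small service means the alerting rule can be tuned or replaced without touching the systems that collect the data or run the trial.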

The Sentient Supply Chain: From Factory Floor to Patient Door

The pharmaceutical supply chain is a massively complex global network where efficiency, quality, and compliance are paramount. Attempting to manage this with a single monolithic system is a recipe for disaster. A “snackable” approach, however, allows companies to deploy a toolkit of targeted AI solutions to optimize specific, high-value problems.

A Toolkit of AI for Intelligent Manufacturing

Instead of a single, all-encompassing “smart factory” platform, leading companies are deploying a portfolio of specialized AI microservices to tackle distinct manufacturing challenges. These “snacks” include:

- AI for Job Shop Scheduling: A dedicated service that analyzes work orders, production lines, and complex interdependencies to create optimized schedules that minimize costly changeover times and enable just-in-time production. Cipla India leveraged this approach to reduce their changeover duration by a remarkable 22%.

- AI for Predictive Maintenance: A service that analyzes real-time data from machine sensors—vibration, heat, sound—to predict equipment failures before they happen. This drastically reduces unplanned downtime, which is a major source of cost and disruption in pharma manufacturing.9

- AI for Quality Control: A computer vision-based microservice that can be deployed on the production line to identify scraps, defects, or units requiring rework in real-time. This boosts labor productivity by freeing up staff for more value-added tasks. Agilent Technologies in Singapore used AI-powered inspections to improve labor productivity by 31%.
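To give a flavor of the predictive maintenance idea, here is a deliberately simple Python sketch that flags sensor readings deviating sharply from a rolling baseline. It uses a plain rolling z-score rather than a trained model, and the window size, threshold, and vibration values are assumptions chosen for illustration only.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window: int = 5, z_threshold: float = 3.0) -> list:
    """Return indices of readings far outside the preceding rolling window.

    A reading is flagged when it lies more than `z_threshold` sample
    standard deviations from the mean of the previous `window` readings.
    """
    flagged = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma > 0 and abs(readings[i] - mu) / sigma > z_threshold:
            flagged.append(i)
    return flagged


# Hypothetical vibration amplitudes from one machine sensor.
vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 1.0, 0.95, 2.5, 1.0]

anomalies = flag_anomalies(vibration)
print(anomalies)
```

A real service would likely combine several sensor channels and a learned failure model, but the contract is the same: readings in, early warnings out, independent of the rest of the manufacturing stack.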

Agentic AI in Logistics and Demand Forecasting

The principles of agentic AI are also being applied to the broader supply chain. Shabbir Dahod, CEO of TraceLink, envisions a future where agentic AI automates routine supply management activities. Imagine an AI agent—a small, dedicated microservice—that spends its day monitoring order statuses, verifying lead times with suppliers, and automatically flagging exceptions or potential delays for a human manager to review. This automates the tedious, manual work, allowing supply chain professionals to focus on strategic problem-solving.

Of course, as Dahod wisely cautions, “AI is only as effective as the extent to which you are digitalized in your end-to-end supply chain.” A modular AI strategy must be built on a foundation of clean, accessible digital data.

This approach is already bearing fruit in demand forecasting. Companies like Johnson & Johnson and Novartis are using specialized AI models to analyze historical sales data, market trends, and even geopolitical factors to predict demand with far greater accuracy than traditional methods.9 This reduces costly stockouts of critical medicines and minimizes waste from overstocking, directly impacting the bottom line.

This modular, “portfolio” approach to AI implementation offers a profound strategic advantage. Instead of making one massive, high-risk, all-or-nothing bet on a single monolithic platform, you can make dozens of smaller, lower-risk bets on specialized microservices. This allows you to rapidly test new ideas, measure the performance of each “snack” with targeted, business-centric KPIs—like reduction in machine downtime or improvement in forecast accuracy—and make dynamic investment decisions. You can quickly cut your losses on underperforming models and double down on those that deliver clear, measurable ROI. This level of financial and strategic agility is simply impossible to achieve with an intertwined, monolithic system where the value of individual components is opaque.

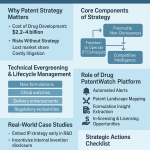

The Intelligence Edge: Turning Patent Data into Competitive Fuel

In the hyper-competitive landscape of the pharmaceutical industry, information is power. The ability to understand your competitors’ strategies, anticipate market shifts, and protect your own intellectual property is not just an advantage; it’s a prerequisite for survival. Yet, companies today are drowning in a sea of IP data. The sheer volume of global patent filings, clinical trial registrations, regulatory documents, and scientific literature has grown so immense that manual analysis is no longer feasible. AI is the only way to navigate this deluge and extract timely, strategic insights.20

Deploying “Snackable AI” for Patent Analytics and Competitive Intelligence

A monolithic “Competitive Intelligence AI” is the wrong approach. It would be too slow and too generic to provide the specific, high-value insights needed to make critical decisions. A “snackable” strategy, however, allows you to deploy a fleet of specialized AI microservices, each designed to perform a specific intelligence task with surgical precision and efficiency. Imagine creating a dedicated intelligence toolkit with services like:

- The “White Space” Analyst: An AI model trained to continuously scan the global patent landscape, using natural language processing to understand the claims and technologies being protected. Its sole job is to identify technological gaps and therapeutic areas that are underexplored or not fully exploited by your competitors, flagging potential new R&D opportunities for your strategy team.

- The “Infringement” Sentinel: A real-time monitoring service that is constantly scanning new patent filings and publications. When it detects a new patent that may infringe upon your existing IP portfolio, it automatically alerts your legal team with a detailed report, allowing for proactive intervention instead of reactive litigation.1

- The “Competitor Strategy” Mapper: A sophisticated NLP-based service that doesn’t just look at patents in isolation. It correlates a competitor’s patent filings with their clinical trial registrations, their scientific publications, and even their hiring patterns for key scientists. By connecting these disparate dots, this service can map out a competitor’s likely R&D trajectory, giving you an invaluable early warning of their strategic shifts.21

The Symbiotic Engine: Integrating AI with Data Platforms like DrugPatentWatch

These “snackable” AI tools are powerful, but they are nothing without high-quality fuel. An AI model is only as good as the data it’s trained on, and in the world of pharmaceutical IP, the quality, comprehensiveness, and structure of that data are paramount. This is where a platform like DrugPatentWatch becomes an indispensable strategic asset.

It’s a mistake to view DrugPatentWatch as just another database. Instead, you should see it as the foundational, structured data layer that powers your entire “snackable” intelligence engine.23 It provides the clean, reliable, and integrated data on patents, litigation, clinical trials, API suppliers, and crucial patent expiration dates that your AI models need to function effectively.23

Let’s walk through a concrete, real-world example of how this symbiotic relationship creates a powerful competitive advantage:

- The Data Foundation: Your company subscribes to the DrugPatentWatch Ultimate Plan, gaining API access to its rich, real-time data feeds, including detailed information on patent applications and, critically, US drug patent litigation like inter partes review (IPR) filings.23

- The “Snackable” Intelligence Layer: Your internal AI team develops a small, highly specialized AI microservice. Its one and only job is to monitor the DrugPatentWatch data feed for any new IPRs filed against key patents held by your top three competitors in a specific therapeutic area.

- Real-Time Strategic Insight: A challenger files an IPR against your main competitor’s blockbuster drug. Within minutes, your “IPR Alert” microservice is triggered. It automatically ingests the filing details from DrugPatentWatch and then performs a rapid, multi-factor analysis. It assesses the litigation history of the challenger, analyzes the specific claims being challenged in the patent, and cross-references recent decisions from the Patent Trial and Appeal Board (PTAB) in similar cases.

- Actionable Intelligence: The microservice generates a concise, two-page summary report with a “Threat Score” and a “Probability of Success” estimate. This report lands in the inboxes of your CEO, Chief Strategy Officer, and Head of Business Development before your competitor’s own legal team has likely even finished their first internal meeting on the topic.

This is the power of the “snackable” approach. A small, focused tool, fed by a world-class data source, can deliver strategic foresight that is impossible to achieve through manual methods. It transforms your organization from being reactive to being proactive, allowing you to capitalize on market disruptions while your competitors are still trying to figure out what happened.
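The walkthrough above can be sketched as a small Python service. Everything here is hypothetical: the `IprFiling` record shape, the company names, the win-rate table, and the scoring heuristic are invented for illustration, and the in-memory `FEED` list stands in for whatever data feed the service would actually consume. It does not reflect any real vendor API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IprFiling:
    # Hypothetical record shape; a real feed will have its own schema.
    patent_number: str
    patent_owner: str
    challenger: str
    claims_challenged: int
    total_claims: int


# Assumed watchlist of competitors and assumed historical success rates.
WATCHLIST = {"CompetitorA", "CompetitorB", "CompetitorC"}
CHALLENGER_WIN_RATE = {"GenericCo": 0.6}


def threat_score(filing: IprFiling, win_rates: dict,
                 default_rate: float = 0.3) -> float:
    """Toy heuristic: share of claims challenged times challenger win rate."""
    coverage = filing.claims_challenged / filing.total_claims
    rate = win_rates.get(filing.challenger, default_rate)
    return round(100 * coverage * rate, 1)


def build_alerts(feed, watchlist: set, win_rates: dict) -> list:
    """Keep filings against watched owners, highest threat first."""
    alerts = [
        {
            "patent": f.patent_number,
            "owner": f.patent_owner,
            "challenger": f.challenger,
            "threat_score": threat_score(f, win_rates),
        }
        for f in feed
        if f.patent_owner in watchlist
    ]
    return sorted(alerts, key=lambda a: a["threat_score"], reverse=True)


# In-memory stand-in for newly arrived filings.
FEED = [
    IprFiling("US-0000001", "CompetitorA", "GenericCo", 10, 20),
    IprFiling("US-0000002", "UnrelatedCo", "GenericCo", 5, 5),
    IprFiling("US-0000003", "CompetitorB", "NewEntrant", 4, 16),
]

alerts = build_alerts(FEED, WATCHLIST, CHALLENGER_WIN_RATE)
for alert in alerts:
    print(alert)
```

The scoring function is the piece most worth replacing: a serious version would draw on claim-level analysis and PTAB decision history, while the filtering and ranking scaffold around it could stay as is.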

This model points toward the future of competitive intelligence in the pharmaceutical industry. The goal is not to build a single, all-knowing “CI AI.” Rather, the winning strategy is to create a distributed and resilient intelligence network—an ecosystem of specialized AI agents. One agent might be subscribed to the DrugPatentWatch feed for IP intelligence, another to a clinical trial database for R&D updates, and a third to financial news APIs to monitor for M&A signals. Each “snackable” agent is an expert in its domain, optimized for its specific data source and analytical task. When orchestrated effectively, this collection of loosely coupled, specialized services creates an intelligence capability that is far more powerful, adaptable, and insightful than any single, monolithic system could ever hope to be.

Navigating the Labyrinth: Risks, Regulations, and Governance in a Modular World

Embracing a “Snackable AI” strategy is not without its challenges. While a microservices architecture makes complexity more visible and manageable, it does not eliminate it. The complexity simply shifts—from the tangled internals of a single application to the intricate network of interactions between dozens, or even hundreds, of independent services. Navigating this new landscape requires a deliberate and sophisticated approach to governance, risk management, and regulatory compliance.

The Fragmentation Dilemma: The Hidden Costs of Agility

The very modularity that gives “Snackable AI” its power also introduces a significant risk: fragmentation. In a monolithic system, all data typically resides in a single, centralized database. In a microservices ecosystem, each service often has its own dedicated database, optimized for its specific needs.4

This can lead to data silos on a massive scale. Patient data, research findings, and manufacturing logs can become fragmented across numerous independent services, making it incredibly difficult to get a holistic, end-to-end view of a process or a patient journey. This fragmentation increases the risk of data inconsistencies, complicates comprehensive analytics, and can create significant hurdles for regulatory reporting and audits.27 Without a strong data governance strategy from the outset, your agile ecosystem can devolve into a chaotic and disconnected collection of data islands.

The Unseen Threat: How “Snackable AI” Can Amplify Algorithmic Bias

One of the most critical ethical and operational risks in AI is algorithmic bias. AI models learn from the data they are trained on, and if that data reflects existing societal or historical biases, the model will learn, codify, and amplify those biases at scale.27 A now-famous 2019 study published in Science revealed a commercial algorithm used in US hospitals was significantly biased against Black patients because it used healthcare costs—a metric influenced by socioeconomic factors—as a proxy for medical need.

This risk is dangerously magnified in a decentralized, “snackable” AI development model. If you have multiple, autonomous teams independently building and deploying their own AI microservices, how do you ensure that every single one of them is adhering to rigorous standards for data representation, fairness audits, and bias mitigation? A monolithic system, for all its flaws, at least provides a single, centralized chokepoint where these critical ethical checks can be enforced. In a modular world, a biased model for patient recruitment developed by one team could slip through the cracks, leading to discriminatory trial enrollment and potentially invalidating a multi-billion dollar clinical program.

The only effective countermeasure is to pair a decentralized development model with a strong, centralized AI governance body. This could be an AI Center of Excellence or an AI Ethics Committee, tasked with setting universal standards, providing standardized tools for bias detection, and auditing every “snackable” service before it is deployed into a production environment.17
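
As an illustration of what a standardized, pre-deployment bias check might look like, here is a minimal sketch. It assumes binary decisions (1 = selected, 0 = not selected) and a single group attribute. The function names and the 0.8 threshold (borrowed from the common "four-fifths" rule of thumb) are illustrative assumptions, not a standard prescribed by any CoE or regulator.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the fraction of positive decisions (1 = selected) per group."""
    selected = defaultdict(int)
    total = defaultdict(int)
    for decision, group in zip(decisions, groups):
        total[group] += 1
        selected[group] += decision
    return {g: selected[g] / total[g] for g in total}


def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so no disparity to measure
    return min(rates.values()) / highest


def passes_bias_gate(decisions, groups, min_ratio=0.8):
    """Hypothetical deployment gate: block the release if the ratio is too low."""
    return disparate_impact_ratio(decisions, groups) >= min_ratio


# Hypothetical audit sample from a patient recruitment model
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
gate_ok = passes_bias_gate(decisions, groups)  # False: group B is selected far less often
```

A real audit would look at several fairness metrics and at outcome data, but even a simple gate like this gives every team the same measurable bar to clear before deployment.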

The Regulatory Tightrope: Navigating FDA, EMA, and Global Data Privacy

The pharmaceutical industry operates within a complex and ever-evolving web of regulations. Any AI system, “snackable” or not, must comply with a host of rules, from data privacy laws like HIPAA in the U.S. and GDPR in Europe to the specific guidelines for AI and machine learning being developed by regulatory bodies like the FDA and the European Medicines Agency (EMA).9

Key themes are emerging from regulatory guidance. Agencies are emphasizing a risk-based approach, where the level of scrutiny applied to an AI model is proportional to its potential impact on patient safety.32 They are demanding greater model transparency and explainability, pushing back against “black box” algorithms whose decision-making processes are opaque.27 And they are requiring robust documentation of data integrity, model traceability, and the mechanisms for human oversight. Meeting these requirements for a single monolithic system is challenging enough; ensuring compliance across a distributed ecosystem of hundreds of independently developed microservices is a monumental governance task.

A Framework for Trust: Actionable Strategies for Risk Mitigation

To thrive in a modular world, you need a proactive and comprehensive risk management framework. The AI Risk Management Framework (AI RMF) developed by the U.S. National Institute of Standards and Technology (NIST) provides an excellent, widely accepted model built around four core functions: Govern, Map, Measure, and Manage. Applying this framework, we can define a set of actionable strategies specifically tailored to the risks of a “Snackable AI” ecosystem.

- Govern: The foundation of trust is a strong governance structure. This means establishing a cross-functional AI Ethics Committee with representatives from legal, regulatory, data science, and clinical departments. It also means creating a well-resourced AI Center of Excellence (CoE) to serve as the central hub for standards, best practices, and oversight.17

- Map & Measure: You cannot manage risks you cannot see. This requires proactively mapping out potential vulnerabilities and measuring how well your defenses hold up against them. For data security, this means implementing robust encryption for data both at rest and in transit, exploring advanced techniques like differential privacy, and enforcing the principle of least privilege for data access.36 For model security, this involves techniques like adversarial training, where you intentionally attack your own models with manipulated data to make them more resilient, alongside testing against real-world threats like data poisoning or malicious prompt injection.35

- Manage: This is about implementing the controls and processes to mitigate identified risks. A key element is enforcing transparency. This can be achieved by advocating for the use of inherently interpretable models where possible, and implementing post-hoc explainability techniques (like SHAP or LIME) for more complex models. This ability to explain why a model made a particular recommendation is crucial for building trust with clinicians, patients, and regulators.
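
To make one of the techniques named above more concrete, here is a minimal sketch of differential privacy applied to a simple counting query, using the Laplace mechanism. The function names, the example records, and the choice of epsilon are illustrative assumptions. A production system would rely on a vetted privacy library and track a privacy budget across queries.

```python
import random


def laplace_noise(scale, rng):
    """Sample zero-mean Laplace noise as the difference of two exponentials."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def private_count(records, predicate, epsilon, rng=None):
    """Count matching records, then add noise calibrated to epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for record in records if predicate(record))
    sensitivity = 1.0  # adding or removing one record changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical trial records: 30 responders out of 100 patients
records = [{"responder": True}] * 30 + [{"responder": False}] * 70
noisy = private_count(records, lambda r: r["responder"], epsilon=1.0)
```

Smaller values of epsilon add more noise and give stronger privacy. Larger values give more accurate answers but weaker protection for any individual record.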

The following table outlines a practical framework for addressing the unique risks of a modular AI strategy:

| Risk Category | Description in a Modular Context | Key Mitigation Strategy | Responsible Function |
| --- | --- | --- | --- |
| Data Governance Failure | Inconsistent data standards, definitions, and quality across dozens of independent microservice databases. | Establish a centralized Data Governance Council and implement a federated data catalog to create a unified view of data assets. | Chief Data Officer / CoE |
| Amplified Algorithmic Bias | A biased model developed by one team goes undetected and is integrated into critical workflows, causing discriminatory outcomes. | Mandate bias audits using standardized, CoE-provided tools as a non-negotiable step in the CI/CD pipeline before any service can be deployed. | AI Ethics Committee / CoE |
| Cascading Security Breaches | A security breach in one low-security, non-critical service provides a gateway for attackers to move laterally and compromise mission-critical systems. | Implement a “zero-trust” security architecture where no service is trusted by default, and enforce strict authentication and authorization via API gateways. | CISO / IT Security |
| Regulatory Compliance Gaps | Individual, decentralized teams are unaware of or fail to correctly implement evolving FDA/EMA guidelines for AI model validation and documentation. | Create a centralized regulatory intelligence function within the CoE to interpret, disseminate, and enforce compliance standards across all development teams. | Regulatory Affairs / Legal |
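
The “zero-trust” idea in the table can be sketched in a few lines. The example below is a simplified illustration, not a production design: it assumes each service holds a shared secret, signs its calls with HMAC, and that a hypothetical `Gateway` class checks both the signature (authentication) and an explicit allow-list (authorization). The service names and secrets are invented for the example.

```python
import hashlib
import hmac


def sign_request(secret, caller, target):
    """Sign a caller -> target call with the caller's shared secret."""
    message = f"{caller}->{target}".encode("utf-8")
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


class Gateway:
    """Hypothetical gateway: authenticate every call, then check an allow-list."""

    def __init__(self, secrets, allowed_calls):
        self._secrets = secrets             # caller name -> shared secret (bytes)
        self._allowed = set(allowed_calls)  # explicit (caller, target) pairs

    def authorize(self, caller, target, signature):
        secret = self._secrets.get(caller)
        if secret is None:
            return False  # unknown callers are never trusted by default
        expected = sign_request(secret, caller, target)
        authenticated = hmac.compare_digest(expected, signature)
        return authenticated and (caller, target) in self._allowed


gateway = Gateway(
    secrets={"site-selection": b"demo-secret-1", "newsletter": b"demo-secret-2"},
    allowed_calls=[("site-selection", "patient-data")],
)
good_signature = sign_request(b"demo-secret-1", "site-selection", "patient-data")
```

The point of the design is that a compromised low-criticality service (here, `newsletter`) cannot reach `patient-data` even with a valid signature of its own, because that call path was never explicitly allowed.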

Building the Future, One Bite at a Time: A Roadmap for Adoption

Transitioning from a monolithic past to a “snackable” future is a significant undertaking. It is not merely a technology project but a profound organizational transformation. A successful transition requires a clear roadmap, the right tools, and a deliberate focus on fostering an agile culture.

The First Bite: Mapping Your Migration from Monolith to Microservices

The single biggest mistake you can make is attempting a “big bang” migration: trying to rewrite your entire monolithic platform into microservices all at once. This approach is risky, expensive, and prone to failure. A far more strategic and pragmatic path is a phased, incremental migration.

We can learn valuable lessons from the technology company Atlassian, which successfully migrated its own massive platform from a monolith to microservices. Their journey highlights several best practices that are directly applicable to the pharmaceutical industry:

- Map Out Your Migration Strategy First: Before writing a single line of new code, dedicate significant time to planning the sequence of the migration. Identify the different functional domains within your monolith (e.g., target identification, clinical data management, supply chain logistics) and decide which ones to “carve out” first. Prioritize based on a combination of business impact and technical feasibility.

- Build Foundational Tooling Before You Migrate: Atlassian didn’t start by moving services; they started by building the tools they would need to manage the new ecosystem. The most critical tool they built was an internal service catalog called “Microscope,” which served as a central registry to track every single microservice, its owner, its dependencies, and its health status. For a pharma company, this is non-negotiable. You must have a system of record to avoid your new ecosystem descending into chaos.

- Automate Everything You Can: The migration process itself should be as automated as possible. This includes building automated checks for code quality, security, and reliability before any new service can be deployed to production.

- Start with a Single, High-Impact Slice: The best way to begin is to identify a single, high-impact, and relatively isolated function of your existing monolith. “Carve out” this function and rebuild it as your very first microservice. This first “bite” allows you to learn, refine your processes, and, most importantly, demonstrate tangible value to the business. This early win builds the momentum and political capital needed to fund the rest of the journey.
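
To give a feel for what a service catalog records, here is a minimal sketch of a registry that tracks each service's owner, dependencies, and health. It is not a description of how Atlassian's tool works internally. The class names, fields, and example services are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceRecord:
    name: str
    owner: str
    depends_on: list = field(default_factory=list)
    healthy: bool = True


class ServiceCatalog:
    """Hypothetical system of record for every microservice in the ecosystem."""

    def __init__(self):
        self._services = {}

    def register(self, record):
        self._services[record.name] = record

    def missing_dependencies(self):
        """Map each service to the dependencies that nobody has registered."""
        gaps = {}
        for record in self._services.values():
            missing = [d for d in record.depends_on if d not in self._services]
            if missing:
                gaps[record.name] = missing
        return gaps

    def impacted_by(self, name):
        """Return every service that depends, directly or transitively, on name."""
        impacted = set()
        frontier = [name]
        while frontier:
            current = frontier.pop()
            for record in self._services.values():
                if current in record.depends_on and record.name not in impacted:
                    impacted.add(record.name)
                    frontier.append(record.name)
        return impacted


catalog = ServiceCatalog()
catalog.register(ServiceRecord("patient-data", owner="clinical-data-team"))
catalog.register(ServiceRecord("site-selection", "clinical-ops-team", ["patient-data"]))
catalog.register(ServiceRecord("trial-dashboard", "clinical-ops-team", ["site-selection"]))
catalog.register(ServiceRecord("admet-prediction", "discovery-team", ["compound-registry"]))
```

With a registry like this, a question such as "what breaks if `patient-data` goes down?" becomes a lookup (`catalog.impacted_by("patient-data")`) rather than an investigation.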

Assembling Your Toolkit: The Technology Stack for a Snackable Ecosystem

While the strategy is paramount, you also need the right technology stack to bring your “snackable” ecosystem to life. For business leaders, it’s not essential to understand the intricate details of these tools, but it is crucial to understand their purpose and why they are necessary investments.

- Containerization (e.g., Docker): Think of this as creating standardized, sealed shipping containers for your software. Each AI microservice is packaged into its own self-contained “box” along with everything it needs to run. This ensures that the service will behave identically whether it’s on a developer’s laptop, a testing server, or in the production cloud environment.

- Orchestration (e.g., Kubernetes): If Docker creates the containers, Kubernetes is the global logistics system—the port authority, shipping fleet, and air traffic control—that manages them all at scale. It automates the deployment, scaling, and management of your containerized services, ensuring they run smoothly, recover automatically from failures, and can handle fluctuating workloads without manual intervention.

- API Gateways & Service Mesh (e.g., Kong, Istio): These form the secure and managed transportation network for your ecosystem. An API Gateway acts as the single “front door,” controlling how external users and applications access your services. A Service Mesh manages the complex web of communication between your internal services, handling things like secure routing, load balancing, and providing critical visibility into traffic flow.

- Monitoring & Logging (e.g., Prometheus, ELK Stack): This is the “central nervous system” of your ecosystem. In a world with hundreds of services, you need a centralized way to collect metrics, logs, and traces from every single one. These tools give your operational teams the real-time visibility they need to detect problems, diagnose failures, and understand the performance of the entire system.

Fostering an Agile Culture: It’s About People, Not Just Platforms

It cannot be overstated: a successful transition to “Snackable AI” is 20% technology and 80% people and process. You can have the best Kubernetes cluster in the world, but if your culture remains slow, siloed, and resistant to change, your transformation will fail.

This requires a deliberate and well-resourced change management program. You must proactively address the cultural resistance that will inevitably arise when you ask people to work in new ways. This means breaking down the traditional silos between R&D, IT, and commercial departments and fostering the creation of cross-functional, product-oriented teams.

You must also confront the skilled labor shortage head-on. The demand for professionals who can design, build, and operate these modern AI systems far outstrips the supply. A successful strategy requires a two-pronged approach:

- Targeted Hiring: Bring in key experts with deep experience in cloud-native technologies and microservices to seed your internal teams.

- Aggressive Upskilling: Invest heavily in training and developing your existing workforce. Your domain experts—your chemists, biologists, and clinicians—are your greatest asset. By pairing them with tech experts and providing them with access to low-code AI platforms, you can empower them to become “citizen data scientists.” These platforms allow domain experts to build and deploy simple AI models and analytics workflows without needing to write complex code, democratizing innovation and dramatically accelerating the pace of discovery.39

The Command Center: The Rise of the AI Center of Excellence (CoE)

How do you maintain control and ensure quality in a decentralized world? The answer is the AI Center of Excellence (CoE). The CoE is the essential governance and enablement layer that makes the “snackable” model viable at an enterprise scale. It doesn’t build everything itself; instead, it provides the “guardrails” that allow decentralized teams to innovate safely and effectively.

The CoE’s role is to be an accelerator, not a bottleneck. It is a cross-functional team responsible for:

- Identifying High-Impact Use Cases: Working with business units to identify and prioritize the most valuable opportunities for AI.

- Developing Scalable Deployment Strategies: Creating the “paved road”—the standardized infrastructure, tools, and CI/CD pipelines that make it easy for teams to build and deploy new microservices.

- Setting Standards and Best Practices: Defining the technical, ethical, and regulatory standards that all AI services must adhere to, including guidelines for data quality, model validation, and bias mitigation.

- Ensuring Seamless Integration: Managing the overall architecture of the ecosystem to ensure that new services can be integrated smoothly and securely.

By providing this centralized expertise and infrastructure, the CoE empowers your autonomous teams to move faster, while ensuring that their innovations are secure, compliant, and aligned with the company’s overall strategic goals.

The Horizon: What’s Next for Snackable AI in Pharma?

The shift to a modular, “snackable” AI architecture is not an end state; it is the beginning of a new era of agility and innovation. By building this flexible foundation, you are positioning your organization to capitalize on the next wave of technological breakthroughs that will define the future of medicine.

The Democratization of Innovation: AI for Everyone

The long-term impact of making AI tools more accessible and “snackable” is the true democratization of innovation. When AI is no longer the exclusive domain of a centralized, high-priesthood of data scientists, but is instead a set of accessible tools that can be used by experts across the organization, the potential for discovery explodes.

We are already seeing glimpses of this future. Consider the bold move by Moderna, which took a “digital-first, AI-focused” approach by rolling out OpenAI’s ChatGPT Enterprise to its entire workforce. They didn’t just give employees access; they actively encouraged them to build and share their own custom GPTs to solve problems within their specific domains. This led to a Cambrian explosion of internal innovation, with custom bots being created for everything from analyzing performance reviews to suggesting clinical trial dosing recommendations.

Of course, this radical democratization creates its own significant governance challenges—how do you manage the risks of inaccurate “hallucinations” or the misuse of custom bots in critical GxP processes? Moderna’s journey highlights the central tension of the future: balancing the incredible speed and innovation unlocked by widespread AI adoption with the need for robust governance and control. This trend is further accelerated by the open-sourcing of powerful, domain-specific models. When a company like Amgen makes the code, data, and model weights for its high-performance protein language model, AMPLIFY, publicly available, it democratizes access to state-of-the-art tools for the entire scientific community, enabling smaller biotechs and academic labs to build on their work.

Federated Learning: The Ultimate Snackable Collaboration

One of the most significant hurdles in healthcare AI is data access. Sensitive patient data and proprietary research data are locked away in institutional silos, protected by privacy regulations and competitive concerns. This data fragmentation severely limits the power of AI models, which thrive on large, diverse datasets.

Federated learning emerges as a revolutionary technology that is perfectly aligned with the decentralized, modular ethos of “Snackable AI.” It offers a way to train a single, powerful, global AI model across multiple organizations—such as a consortium of hospitals, research institutions, and pharmaceutical companies—without any of them ever having to share their raw, sensitive data.28

The concept is elegant: instead of bringing the data to a central model, the model travels to the data. The global model is sent to each participating institution, where it is trained locally on their private data. Only the updated model parameters—the mathematical learnings, not the data itself—are sent back to be aggregated into a new, improved global model. This process is repeated, allowing the model to learn from the collective knowledge of the entire network while ensuring that sensitive data never leaves the protection of its owner’s firewall. This is a powerful solution to the data privacy, security, and fragmentation challenges that have long plagued the industry, enabling a new level of collaboration that was previously impossible.
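
The train-locally, aggregate-centrally loop described above can be sketched with a toy model. The example below assumes a two-parameter linear model, plain gradient descent at each site, and sample-weighted averaging of parameters (the approach commonly called federated averaging). The data, learning rate, and function names are illustrative. Real deployments add secure aggregation and other protections, because model updates can themselves leak information.

```python
def local_update(weights, data, lr=0.05, epochs=20):
    """Train a copy of the global model [slope, intercept] on one site's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(updates):
    """Average site models, weighting each by its number of local examples."""
    total = sum(n for _, n in updates)
    dims = len(updates[0][0])
    return [sum(weights[i] * n for weights, n in updates) / total for i in range(dims)]


def train_federated(sites, rounds=50):
    """Run repeated rounds; only parameters, never raw data, leave a site."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [(local_update(global_weights, data), len(data)) for data in sites]
        global_weights = federated_average(updates)
    return global_weights


# Hypothetical private datasets at three institutions, all following y = 2x + 1
site_a = [(0.0, 1.0), (0.5, 2.0), (1.0, 3.0)]
site_b = [(1.5, 4.0), (2.0, 5.0)]
site_c = [(-1.0, -1.0), (-0.5, 0.0), (0.25, 1.5)]
slope, intercept = train_federated([site_a, site_b, site_c])
```

In this toy setup no single site sees enough of the input range to be confident on its own, yet the aggregated model recovers the shared relationship without any raw records being pooled.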

The Long-Term Vision: The Composable Pharma Enterprise

When we synthesize all these trends—a modular microservices architecture, the democratization of AI tools, and new collaborative models like federated learning—a powerful vision for the future of the pharmaceutical company emerges: the Composable Pharma Enterprise.

In this future, the entire organization’s operations are built not from rigid, siloed departments and monolithic software, but from a dynamic library of interchangeable, AI-powered business capabilities—the “snacks.” These are not just software modules; they are encapsulated business functions that can be rapidly discovered, combined, and reconfigured to meet new challenges and opportunities.

Imagine you need to launch a new drug for a rare genetic disease. Instead of a multi-year, linear process, a cross-functional team could rapidly assemble a custom, end-to-end workflow by plugging together pre-existing, validated microservices from an enterprise-wide catalog:

- A “Genomic Target Identification” service to pinpoint the precise patient population.

- A “Personalized Clinical Trial Recruitment” service that connects directly to specialty hospital networks.

- A “Cryo-Shipping Logistics” service to manage the complex cold chain requirements.

- A “Real-Time Patient Adherence Monitoring” service that uses data from smart devices to support patients at home.

This is the ultimate expression of organizational agility. It transforms the business model itself. Value creation shifts from the slow, arduous process of owning and operating monolithic, end-to-end pipelines to mastering the art of orchestrating a dynamic ecosystem of both internal and external AI services. The most valuable corporate skill will no longer be building everything in-house; it will be the ability to rapidly identify, vet, integrate, and manage the best-in-class services—wherever they may come from—to create novel, efficient, and patient-centric value streams.
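
The idea of assembling a workflow from a catalog can be shown in miniature. In the sketch below, each "service" is just a local function that takes and returns a context dictionary. In practice these would be network calls to validated microservices. All service names, fields, and values are hypothetical.

```python
def genomic_target_identification(ctx):
    return {**ctx, "target_population": "patients carrying " + ctx["gene"] + " variants"}


def trial_recruitment(ctx):
    return {**ctx, "recruitment_plan": "specialty sites for " + ctx["target_population"]}


def cryo_shipping_logistics(ctx):
    return {**ctx, "shipping": "cold chain at " + str(ctx["storage_temp_c"]) + " C"}


# Hypothetical enterprise-wide catalog of validated capabilities
CATALOG = {
    "genomic-target-identification": genomic_target_identification,
    "trial-recruitment": trial_recruitment,
    "cryo-shipping-logistics": cryo_shipping_logistics,
}


def compose(catalog, service_names):
    """Build one end-to-end workflow from named catalog entries, in order."""
    missing = [name for name in service_names if name not in catalog]
    if missing:
        raise KeyError(f"services not in catalog: {missing}")
    steps = [catalog[name] for name in service_names]

    def workflow(ctx):
        for step in steps:
            ctx = step(ctx)
        return ctx

    return workflow


launch = compose(
    CATALOG,
    ["genomic-target-identification", "trial-recruitment", "cryo-shipping-logistics"],
)
result = launch({"gene": "GENE-X", "storage_temp_c": -80})
```

Reconfiguring the workflow for a different program then means changing the list of names, not rewriting the pipeline.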

The companies that master this composable, “snackable” model will be the ones who not only survive the immense pressures facing the industry but also capture the lion’s share of the value in a global AI in pharma market projected to soar to $25.73 billion by 2030. They will be the agile, intelligent, and ultimately dominant pharmaceutical enterprises of the future.

Conclusion: The Dawn of the Agile Pharma Enterprise

The pharmaceutical industry is at an inflection point. The old models of operation, characterized by decade-long timelines, multi-billion-dollar costs, and monolithic technology stacks, are no longer tenable. The relentless pressure of Eroom’s Law demands a new way of thinking, a new way of working, and a new technological foundation. The shift from the rigid, slow, and fragile AI monolith to an agile, resilient, and innovative ecosystem of “Snackable AI” is not just a recommended upgrade; it is an essential strategic evolution for survival and leadership.

By embracing a modular, microservices-based architecture, you are not simply modernizing your IT infrastructure. You are fundamentally re-architecting your business for speed. You are empowering small, autonomous teams to innovate rapidly, adopting the best new technologies as they emerge, and building a culture of agility and ownership. You are transforming every part of your value chain, from using agentic AI to create autonomous discovery labs, to deploying precision analytics that make clinical trials faster and more patient-centric, to building sentient supply chains that are both efficient and resilient.

This approach allows you to turn the overwhelming flood of patent and competitive data into a strategic weapon, using specialized AI services fueled by platforms like DrugPatentWatch to achieve a level of market awareness that was previously unimaginable. While this journey requires a sophisticated approach to governance to manage the risks of fragmentation, bias, and security, the rewards are immense. The ultimate destination is the Composable Pharma Enterprise—an organization so agile it can assemble and reassemble its capabilities on the fly to meet the ever-changing demands of science, medicine, and the market.

The companies that hesitate, that cling to the perceived safety of their legacy monolithic systems, will inevitably fall behind. They will be outmaneuvered, out-innovated, and ultimately rendered obsolete. The future belongs to those who have the courage to dismantle the old and embrace a new model where less is truly more. The dawn of the agile pharma enterprise is here, and it will be built one “snackable” piece at a time.

Key Takeaways

- The Monolith is a Dead End: Large, all-in-one AI platforms are too slow, rigid, and expensive to maintain. They create technology lock-in and are fundamentally incompatible with the speed of modern AI innovation.

- Embrace “Snackable AI”: The future is a modular, microservices-based architecture. This approach uses small, independent, and specialized AI services that can be developed, deployed, and scaled individually, providing massive gains in agility, resilience, and cost-efficiency.

- Architecture Shapes Culture: A microservices architecture is not just a technical choice; it’s a cultural one. It enables small, autonomous teams, fostering a culture of ownership, speed, and accountability that is essential for attracting and retaining top talent.

- Targeted ROI Across the Value Chain: “Snackable AI” allows you to deploy specific solutions for high-value problems, from accelerating preclinical discovery with agentic AI to cutting clinical trial enrollment times by 10-15% and reducing manufacturing changeover times by over 20%.

- Turn Patent Data into a Weapon: Combine specialized AI microservices with comprehensive data platforms like DrugPatentWatch to create a real-time competitive intelligence engine, enabling proactive strategy instead of reactive analysis.

- Governance is Non-Negotiable: A decentralized development model must be paired with strong, centralized governance. An AI Center of Excellence (CoE) is critical for setting standards, managing risk, and ensuring quality, security, and compliance across the ecosystem.

- Prioritize Bias Mitigation: In a modular world, the risk of deploying a biased algorithm increases. Centralized governance must enforce rigorous, standardized bias audits for every service before it goes into production to ensure health equity.

- Migrate Incrementally: Do not attempt a “big bang” rewrite. Adopt a phased migration strategy, carving out one piece of functionality from your legacy monolith at a time to build momentum and demonstrate value.

- Invest in People and Process: A successful transformation is 80% about culture and change management. Invest heavily in upskilling your existing workforce and fostering a new, more agile way of working.

- The Future is Composable: The ultimate goal is to become a “Composable Pharma Enterprise,” able to dynamically assemble and reconfigure AI-powered business capabilities to respond to market needs with unprecedented speed.

Frequently Asked Questions (FAQ)

1. We’ve already invested millions in a monolithic AI platform. What’s the realistic path forward?

This is a common and critical question. The answer is not to “rip and replace,” which is prohibitively risky and expensive. The most effective strategy is an incremental approach often called the “strangler fig” pattern. Instead of trying to extinguish the monolith overnight, you strategically grow new services around it, gradually choking off its functions. Start by identifying the areas of the monolith that are causing the most business pain or have the highest potential for immediate ROI. Carve out that single function and rebuild it as your first microservice. For example, if your monolithic platform’s clinical site selection module is slow and inaccurate, make an AI-powered site selection microservice your first project. Once it’s successful and delivering value, you redirect all requests for that function to the new service and decommission that part of the monolith. You repeat this process, slice by slice, gradually shrinking the monolith’s responsibilities until it can be safely retired. This pragmatic approach minimizes risk, allows you to learn as you go, and builds business confidence with each successful step.
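
The routing at the heart of the strangler fig pattern can be sketched as a thin facade that sits in front of the monolith. The class and function names below are hypothetical, and the "monolith" and "microservice" are stand-in functions. The point is only to show how requests are redirected one function at a time.

```python
class StranglerFacade:
    """Route each request to a new service if one exists, else to the monolith."""

    def __init__(self, legacy_handler, all_functions):
        self._legacy = legacy_handler
        self._all_functions = set(all_functions)
        self._migrated = {}

    def migrate(self, function_name, new_handler):
        if function_name not in self._all_functions:
            raise ValueError(f"unknown function: {function_name}")
        self._migrated[function_name] = new_handler

    def handle(self, function_name, payload):
        handler = self._migrated.get(function_name)
        if handler is not None:
            return handler(payload)
        return self._legacy(function_name, payload)

    def remaining_in_monolith(self):
        return sorted(self._all_functions - set(self._migrated))


def legacy_monolith(function_name, payload):
    return f"monolith handled {function_name}"


def site_selection_service(payload):
    return "microservice ranked sites for " + payload["trial"]


facade = StranglerFacade(
    legacy_monolith, ["site-selection", "data-management", "reporting"]
)
before = facade.handle("site-selection", {"trial": "TRIAL-001"})
facade.migrate("site-selection", site_selection_service)
after = facade.handle("site-selection", {"trial": "TRIAL-001"})
```

Callers keep talking to the facade throughout, so each slice can be moved (or rolled back) without them noticing, and `remaining_in_monolith()` shows how much of the old system is left to retire.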

2. How do we measure the ROI of a single “snackable” AI microservice to justify the investment?

The beauty of a “snackable” approach is that it makes ROI measurement far more direct and transparent than with a monolith. The key is to define clear, measurable, business-centric Key Performance Indicators (KPIs) for each service before you build it. These should not be technical metrics like “CPU utilization”; they must be metrics the business understands and values. For example:

- For a Predictive Maintenance Service: The primary KPI is “reduction in unplanned machine downtime (in hours per year),” which translates directly to cost savings and increased production output.

- For a Patient Recruitment Service: The KPI could be “reduction in average patient screening time (in days)” or “increase in the percentage of sites meeting enrollment targets.”

- For a Demand Forecasting Service: The KPI is “improvement in forecast accuracy (e.g., reduction in Mean Absolute Percentage Error),” which directly impacts inventory carrying costs and the risk of stockouts.

By defining these specific, quantifiable business outcomes upfront, you can clearly track the performance of each “snack” and demonstrate its direct contribution to the bottom line.
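
As a worked example of one of these KPIs, Mean Absolute Percentage Error is the average of |actual - forecast| / |actual| across periods, expressed as a percentage. The sketch below computes it for a baseline forecast and for a new service's forecast. The demand figures are invented purely for illustration.

```python
def mean_absolute_percentage_error(actual, forecast):
    """Return MAPE as a percentage; lower means a more accurate forecast."""
    if len(actual) != len(forecast) or not actual:
        raise ValueError("actual and forecast must be non-empty and the same length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    errors = [abs((a - f) / a) for a, f in zip(actual, forecast)]
    return 100.0 * sum(errors) / len(errors)


# Hypothetical monthly demand (units) and two competing forecasts
actual_demand = [100, 200, 400]
baseline_forecast = [80, 250, 300]
service_forecast = [90, 210, 380]

baseline_mape = mean_absolute_percentage_error(actual_demand, baseline_forecast)
service_mape = mean_absolute_percentage_error(actual_demand, service_forecast)
improvement = baseline_mape - service_mape  # percentage points of error removed
```

Reporting the KPI as a before-and-after difference ties the service directly to a number the supply chain team already tracks.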

3. What is the single biggest cultural barrier to adopting a microservices approach, and how do we overcome it?

The single biggest cultural barrier is the shift from traditional, siloed, functional departments (e.g., the “Discovery” department, the “Clinical Ops” department, the “IT” department) to durable, cross-functional, product-oriented teams. In a microservices world, you don’t have a temporary project team that builds a service and then hands it off to IT to operate. Instead, you have a small, persistent team that owns a business capability (e.g., the “ADMET Prediction Service”) end-to-end, for its entire lifecycle. This team includes the scientists who need the service, the data scientists who build the models, and the engineers who operate the infrastructure. Overcoming the inertia of traditional organizational structures is the hardest part. The solution requires unequivocal and highly visible executive sponsorship. Leadership must constantly communicate the “why” behind the change, restructure teams and reporting lines to support this new model, and, most importantly, empower these small teams with genuine autonomy and accountability for their outcomes.

4. How do we prevent our “snackable” ecosystem from becoming an ungovernable mess of hundreds of disconnected services?

This is a very real risk and the primary reason why undisciplined microservices initiatives fail. The antidote to chaos is a combination of strong governance and robust tooling, orchestrated by an AI Center of Excellence (CoE). Governance cannot be an afterthought; it must be designed in from day one. The CoE’s role is to create the “paved road” that makes it easy for teams to do the right thing and hard to do the wrong thing. A critical piece of this is implementing a comprehensive service catalog, as Atlassian did with their “Microscope” tool. This catalog is the single source of truth for the entire ecosystem, documenting every service, its owner, its API, its dependencies, and its operational health. It provides the visibility needed to manage complexity. The CoE also enforces standards through automation—for example, by building CI/CD pipelines that automatically run security scans, bias checks, and compliance validations before any new service can be deployed. This combination of visibility and automated guardrails is what allows you to scale innovation without scaling chaos.

5. With a modular approach, where does accountability lie when something goes wrong? For instance, if a drug candidate fails based on a series of microservice predictions.

This is a sophisticated question that gets to the heart of operationalizing a microservices culture. Accountability becomes layered. The team that owns a specific microservice (e.g., the “Toxicity Prediction Service”) is accountable for the performance, accuracy, and uptime of that individual service. They are responsible for ensuring their model meets the validation standards set by the CoE. However, a “Product Owner” or “Program Lead” must have end-to-end accountability for the overall business outcome (e.g., the success of the preclinical candidate pipeline). When a failure occurs, the goal is not to assign blame to a single team but to perform a blameless root cause analysis. This requires robust logging and traceability across all services, allowing you to reconstruct the entire chain of events. Was the failure due to an inaccurate prediction from one service? Or was it due to a faulty integration between two otherwise correct services? This shifts the focus from blaming a single system to analyzing and improving the complex interactions within the entire ecosystem, which is a more mature and effective way to manage risk in a distributed world.
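
The traceability this answer depends on usually comes down to passing one shared identifier through every service call. Here is a minimal sketch, assuming an in-memory log and two stand-in services. The names, the toxicity score, and the decision threshold are all hypothetical.

```python
import uuid


class TraceLog:
    """Hypothetical central log keyed by a trace ID shared across services."""

    def __init__(self):
        self.events = []

    def record(self, trace_id, service, inputs, output):
        self.events.append(
            {"trace_id": trace_id, "service": service, "inputs": inputs, "output": output}
        )

    def reconstruct(self, trace_id):
        """Return, in call order, every step recorded for one decision."""
        return [e for e in self.events if e["trace_id"] == trace_id]


def traced(log, service_name, fn):
    """Wrap a service so each call logs its inputs and output under the trace ID."""
    def wrapper(trace_id, payload):
        output = fn(payload)
        log.record(trace_id, service_name, payload, output)
        return output
    return wrapper


log = TraceLog()
predict_toxicity = traced(
    log, "toxicity-prediction",
    lambda compound: {"compound": compound, "toxicity_risk": 0.12},
)
rank_candidate = traced(
    log, "candidate-ranking",
    lambda pred: "advance" if pred["toxicity_risk"] < 0.2 else "drop",
)

trace_id = str(uuid.uuid4())
decision = rank_candidate(trace_id, predict_toxicity(trace_id, "CMPD-001"))
chain = log.reconstruct(trace_id)
```

With the chain in hand, a root cause analysis can check each step's inputs and outputs in sequence and see whether the fault lay in a prediction or in the handoff between services.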

References

- Patenting Drugs Developed with Artificial Intelligence: Navigating the Legal Landscape, accessed July 28, 2025, https://www.drugpatentwatch.com/blog/patenting-drugs-developed-with-artificial-intelligence-navigating-the-legal-landscape/

- Software Development Services for Startups | Relevant, accessed July 28, 2025, https://relevant.software/blog/ai-in-pharma/

- AI in Pharma and Biotech: Market Trends 2025 and Beyond, accessed July 28, 2025, https://www.coherentsolutions.com/insights/artificial-intelligence-in-pharmaceuticals-and-biotechnology-current-trends-and-innovations

- Microservices vs. monolithic architecture | Atlassian, accessed July 28, 2025, https://www.atlassian.com/microservices/microservices-architecture/microservices-vs-monolith

- Monolith vs. Microservices: Choosing the Right Architecture – Ideas2IT, accessed July 28, 2025, https://www.ideas2it.com/blogs/monolithic-to-microservices

- AI Pharma: Transforming Drug Discovery in 2025 – Research AIMultiple, accessed July 28, 2025, https://research.aimultiple.com/ai-pharma/

- Large Language Model Agent for Modular Task Execution in Drug …, accessed July 28, 2025, https://www.biorxiv.org/content/10.1101/2025.07.02.662875v1

- RECEPTOR.AI | AI-Driven Drug Discovery, accessed July 28, 2025, https://www.receptor.ai/

- AI in Pharma: Use Cases, Success Stories, and Challenges in 2025, accessed July 28, 2025, https://scw.ai/blog/ai-in-pharma/

- Generative AI in Pharma: The Most Promising Use Cases [2025], accessed July 28, 2025, https://masterofcode.com/blog/generative-ai-chatbots-in-healthcare-and-pharma

- Top 10 AI Agent Useful Case Study Examples (2025) – Creole Studios, accessed July 28, 2025, https://www.creolestudios.com/real-world-ai-agent-case-studies/

- Revolutionizing Clinical Study Design: The Role of AI and Analytics …, accessed July 28, 2025, https://www.iqvia.com/blogs/2025/06/revolutionizing-clinical-study-design

- Performance in clinical development with AI & ML | McKinsey, accessed July 28, 2025, https://www.mckinsey.com/industries/life-sciences/our-insights/unlocking-peak-operational-performance-in-clinical-development-with-artificial-intelligence

- Harnessing AI to Streamline Clinical Trials, Optimize Pharmacy, and …, accessed July 28, 2025, https://www.pharmacytimes.com/view/harnessing-ai-to-streamline-clinical-trials-optimize-pharmacy-and-personalize-cancer-treatment

- How AI is Revolutionizing Drug Development and Study Design, accessed July 28, 2025, https://www.modernvivo.com/whitepapers/ai-optimization-and-streamlining-drug-development

- IoT and AI in Healthcare: A Prescription for Innovation – BrightTALK, accessed July 28, 2025, https://www.brighttalk.com/webcast/17202/610811

- AI in Pharma Supply Chains: 3 Trends Defining 2025 – Pharma IQ, accessed July 28, 2025, https://www.pharma-iq.com/manufacturing/articles/ai-driven-productivity-gains-transforming-pharma-supply-chain-efficiency

- AI meets pharma for a smarter supply chain, accessed July 28, 2025, https://www.scmr.com/article/ai-meets-pharma-for-a-smarter-supply-chain

- Agentic AI in the Pharma Industry [5 Case Studies] [2025 …, accessed July 28, 2025, https://digitaldefynd.com/IQ/agentic-ai-in-the-pharma-industry/

- Using AI Patent Analytics for Competitive Advantage – PatentPC, accessed July 28, 2025, https://patentpc.com/blog/using-ai-patent-analytics-for-competitive-advantage

- Role of AI in Pharma Market Intelligence – AMPLYFI, accessed July 28, 2025, https://amplyfi.com/blog/role-of-ai-in-pharma-market-intelligence/

- AI in Pharmaceutical Competitive Intelligence: Leveraging Human-AI Collaboration for Maximizing Success – BiopharmaVantage, accessed July 28, 2025, https://www.biopharmavantage.com/ai-pharmaceutical-competitive-intelligence

- DrugPatentWatch | Software Reviews & Alternatives – Crozdesk, accessed July 28, 2025, https://crozdesk.com/software/drugpatentwatch

- DrugPatentWatch 2025 Company Profile: Valuation, Funding & Investors | PitchBook, accessed July 28, 2025, https://pitchbook.com/profiles/company/519079-87

- DrugPatentWatch Review – Crozdesk, accessed July 28, 2025, https://crozdesk.com/software/drugpatentwatch/review

- Make Better Decisions with DrugPatentWatch, accessed July 28, 2025, https://www.drugpatentwatch.com/pricing/

- Risks and remedies for artificial intelligence in health care | Brookings, accessed July 28, 2025, https://www.brookings.edu/articles/risks-and-remedies-for-artificial-intelligence-in-health-care/

- Artificial intelligence for optimizing benefits and minimizing risks of pharmacological therapies: challenges and opportunities – Frontiers, accessed July 28, 2025, https://www.frontiersin.org/journals/drug-safety-and-regulation/articles/10.3389/fdsfr.2024.1356405/full

- ‘Bias in, bias out’: Tackling bias in medical artificial intelligence – Yale School of Medicine, accessed July 28, 2025, https://medicine.yale.edu/news-article/bias-in-bias-out-yale-researchers-pose-solutions-for-biased-medical-ai/

- How IQVIA Addresses Biases in Healthcare AI, accessed July 28, 2025, https://www.iqvia.com/locations/united-states/blogs/2024/10/how-iqvia-addresses-biases-in-healthcare-ai

- Appinventiv’s AI Facts Label for MedTech FDA Compliance, accessed July 28, 2025, https://appinventiv.com/press-release/appinventiv-launches-ai-facts-label-for-medtech-fda-compliance/

- Artificial Intelligence and Regulatory Realities in Drug Development: A Pragmatic View, accessed July 28, 2025, https://globalforum.diaglobal.org/issue/april-2025/artificial-intelligence-and-regulatory-realities-in-drug-development-a-pragmatic-view/

- AI in Pharmaceuticals: Benefits, Challenges, and Insights | DataCamp, accessed July 28, 2025, https://www.datacamp.com/blog/ai-in-pharmaceuticals

- Regulating the Use of AI in Drug Development: Legal Challenges …, accessed July 28, 2025, https://www.fdli.org/2025/07/regulating-the-use-of-ai-in-drug-development-legal-challenges-and-compliance-strategies/

- AI Risk Management: Effective Strategies and Framework, accessed July 28, 2025, https://hiddenlayer.com/innovation-hub/ai-risk-management-effective-strategies-and-framework/

- 7 Serious AI Security Risks and How to Mitigate Them – Wiz, accessed July 28, 2025, https://www.wiz.io/academy/ai-security-risks

- Exploring the Main Privacy Concerns Surrounding Artificial Intelligence in Healthcare: Risks and Mitigation Strategies | Simbo AI – Blogs, accessed July 28, 2025, https://www.simbo.ai/blog/exploring-the-main-privacy-concerns-surrounding-artificial-intelligence-in-healthcare-risks-and-mitigation-strategies-641066/

- AI in Healthcare: Security and Privacy Concerns – Lepide, accessed July 28, 2025, https://www.lepide.com/blog/ai-in-healthcare-security-and-privacy-concerns/

- AI in Pharma: Key Takeaways From MIT Technology Review – Dataiku blog, accessed July 28, 2025, https://blog.dataiku.com/key-takeaways-mit-technology-review

- Use of AI in Pharmaceutical Industry—Applications and Case Study – CodeIT, accessed July 28, 2025, https://codeit.us/blog/ai-in-pharma

- Moderna: Democratizing Artificial Intelligence – Case – Faculty & Research, accessed July 28, 2025, https://www.hbs.edu/faculty/Pages/item.aspx?num=66910

- From Data to Drugs: The Role of Artificial Intelligence in Drug Discovery – Wyss Institute, accessed July 28, 2025, https://wyss.harvard.edu/news/from-data-to-drugs-the-role-of-artificial-intelligence-in-drug-discovery/

- A scoping review of the governance of federated learning in healthcare – PMC, accessed July 28, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC12246253/